Integration of AI and Advanced Decision Support in Military Triage

The transition to Large-Scale Combat Operations (LSCO) and the accompanying potential for Mass Casualty Incidents (MCIs) requires a fundamental re-evaluation of military medical doctrine. Current Tactical Combat Casualty Care (TCCC) and Tactical Emergency Casualty Care (TECC) frameworks, while effective at the individual intervention level (e.g., tourniquets, chest seals), face systemic limitations when deployed in dynamic, complex, and high-volume environments. The operational imperative is no longer merely to optimize life-saving interventions (LSIs), but to drastically reduce the cognitive and temporal burden placed upon frontline medical personnel.

Inherent Limitations of Conventional Triage Protocols (TCCC/TECC)

Traditional, structured triage methodologies are often functionally abandoned during the chaos of an MCI, regardless of whether the protocol is START, SALT, or RAMP. Data indicate a significant gulf between established doctrine and real-world execution: a structured survey noted that formal triage systems were utilized in only 16% of actual MCI events. Furthermore, retrospective reviews of 29 military MCIs, involving over 1,000 casualties, revealed that no formal algorithm was employed. Instead, responders resorted to a binary classification system: sorting casualties into "dying now or dying later / urgent or non-urgent".

This systemic failure to follow agency mass casualty plans is not necessarily a reflection of insufficient training, but rather a consequence of the overwhelming cognitive load imposed by time pressure, scene size, resource constraints, and safety concerns. Structured triage requires 30–60 seconds per injured person to determine the appropriate category, representing an unacceptable time expenditure when the casualty count is high. This constraint forces human decision-makers to rapidly triage based on instinct rather than system compliance. Therefore, the doctrinal crisis in triage is primarily a function of cognitive overload, not a deficiency in the core clinical principles. The immediate value of advanced decision support (ADS) is thus the reduction of this cognitive burden, allowing medics to shift their focus back to core LSI protocols like MARCH.
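
To make the time cost concrete, the short sketch below multiplies the 30–60 seconds-per-casualty figure by a casualty count. The 100-casualty incident and the number of responders working in parallel are illustrative assumptions, and the model deliberately ignores movement between casualties and any interventions performed during triage.

```python
import math


def structured_triage_minutes(casualties: int, responders: int = 1,
                              seconds_per_casualty: float = 45.0) -> float:
    """Wall-clock minutes for one complete structured triage pass.

    Assumes casualties are split as evenly as possible across responders
    working in parallel; ignores walking time and interventions.
    """
    if casualties < 0 or responders < 1:
        raise ValueError("need casualties >= 0 and responders >= 1")
    # The busiest responder handles ceil(casualties / responders) people.
    per_responder = math.ceil(casualties / responders)
    return per_responder * seconds_per_casualty / 60.0


# Bounds from the 30-60 s range for a hypothetical 100-casualty MCI.
for responders in (1, 4):
    low = structured_triage_minutes(100, responders, 30)
    high = structured_triage_minutes(100, responders, 60)
    print(f"{responders} responder(s): {low:.1f}-{high:.1f} min")
```

Under these assumptions a single responder needs 50 to 100 minutes just to sort 100 casualties, before any treatment or evacuation begins; even four responders working in parallel spend 12.5 to 25 minutes.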

The Inflexibility of Expert-Derived Systems

Existing triage systems struggle because they attempt to overlay an "ordered, inflexible and process driven" mechanism onto an environment that is "dynamic, complex, and uncontrolled". This inflexibility leads to critical failures in two major areas. First, current criteria often omit rapid assessments for crucial injuries, such as truncal penetrating trauma. Second, the systems possess limited accuracy and reliability in sorting patients. This inaccuracy often results in "over-triage," where a casualty is placed in a higher acuity group than necessary. Over-triage is not merely a classification error; it represents a waste of time and scarce medical resources that could otherwise be allocated to more critically injured patients. The inherent limitations of these expert opinion-derived tools necessitate the adoption of data-driven methodologies that adapt rapidly to specific injury profiles and resource availability.
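
Over-triage, and its counterpart under-triage, can be quantified by comparing each category assigned in the field with a reference category established afterwards (for example, from whether the casualty actually needed an LSI). The sketch below assumes a simple ordinal scale in which higher numbers mean higher priority; the scale values and the sample data are hypothetical, and the Expectant category is omitted because it does not fit a simple urgency ordering.

```python
from dataclasses import dataclass

# Hypothetical ordinal acuity scale (higher = more urgent).
ACUITY = {"minimal": 1, "delayed": 2, "immediate": 3}


@dataclass
class TriageRates:
    over: float   # share assigned a higher acuity than the reference
    under: float  # share assigned a lower acuity than the reference


def triage_error_rates(assigned: list[str], reference: list[str]) -> TriageRates:
    """Compare field categories against retrospectively determined ones."""
    if len(assigned) != len(reference) or not assigned:
        raise ValueError("need two non-empty lists of equal length")
    over = under = 0
    for a, r in zip(assigned, reference):
        if ACUITY[a] > ACUITY[r]:
            over += 1
        elif ACUITY[a] < ACUITY[r]:
            under += 1
    n = len(assigned)
    return TriageRates(over / n, under / n)


field = ["immediate", "immediate", "delayed", "minimal", "immediate"]
truth = ["immediate", "delayed", "delayed", "delayed", "minimal"]
print(triage_error_rates(field, truth))
```

In this toy sample, two of five casualties (40%) are over-triaged, each drawing Immediate-category attention away from casualties who may need it more, while one (20%) is under-triaged.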

The Time-Critical Trauma Threshold

The efficacy of battlefield triage must be measured against the known limits of survivability. Expert consensus statements have established an unequivocal medical requirement: there must be an upper limit of 2 hours from the point of wounding to surgical intervention (definitive surgical capability) to prevent avoidable death in modern warfare. This stringent timeline reinforces the tactical urgency of the "Golden Hour" concept, despite advancements like pre-hospital haemostatic resuscitation provided by enhanced Medical Emergency Response Teams (MERTe).

The challenge of achieving this 2-hour window elevates medical triage from a tactical concern to a strategic logistical requirement. Because manual, structured triage protocols consume critical time and lead to allocation errors, automated, predictive systems become an operational necessity for meeting this timeline. AI must not only triage accurately but also manage the logistical pipeline, balancing patient acuity against specialized care needs (e.g., immediate transfer to a regional neurosurgical center versus an operating theatre). Without predictive analytics to ensure rapid and correct patient movement, the 2-hour threshold remains practically unattainable during high-volume conflicts.
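
One way an automated system could apply the 2-hour threshold is to check, for each casualty, whether the time already elapsed plus the estimated remaining legs of the evacuation still fits inside the window. This is a minimal sketch; the leg structure and all durations are hypothetical placeholders, and a fielded system would need estimates that account for queueing, weather, and threat.

```python
from dataclasses import dataclass

SURGERY_LIMIT_MIN = 120  # upper limit from point of wounding to surgery


@dataclass
class Casualty:
    casualty_id: str
    minutes_since_wounding: float
    # Hypothetical estimated durations (minutes) of the remaining legs,
    # e.g. wait for transport, transit, handover to the operating theatre.
    remaining_legs_min: list[float]


def slack_minutes(c: Casualty, limit: float = SURGERY_LIMIT_MIN) -> float:
    """Positive slack means the current plan reaches surgery within the limit."""
    return limit - (c.minutes_since_wounding + sum(c.remaining_legs_min))


def at_risk(casualties: list[Casualty]) -> list[tuple[str, float]]:
    """Casualties whose current plan breaches the limit, worst first."""
    slacks = [(c.casualty_id, slack_minutes(c)) for c in casualties]
    return sorted([s for s in slacks if s[1] < 0], key=lambda s: s[1])


casualties = [
    Casualty("A", 20, [15, 40, 10]),
    Casualty("B", 55, [30, 45, 10]),
    Casualty("C", 70, [10, 60, 15]),
]
print(at_risk(casualties))
```

Ranking by remaining slack rather than by acuity alone is a design choice: it surfaces the casualties whose evacuation plan must change now, which is the logistical decision described above.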

Advanced Decision Support (ADS) via Predictive Analytics and AI

The integration of artificial intelligence and machine learning (AI/ML) represents a paradigm shift from static, diagnostic triage to continuous, anticipatory medical prioritization. The empirical data strongly support the superior performance and consistency of algorithmic decision support in high-pressure medical environments.

Empirical Superiority of Machine Learning Triage Models

Research utilizing trauma registries demonstrates that ML models developed using high-fidelity data outperform established, expert-derived algorithms. A study focused on predicting Priority 1 (P1) patients—those requiring time-critical LSIs—benchmarked several existing tools against ML models trained on the UK Trauma Audit and Research Network (TARN) registry. The best existing expert tool tested, the Battlefield Casualty Drills (BCD) Triage Sieve, showed a sensitivity of 68.2% and an Area Under the Curve (AUC) of 0.688.
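
Sensitivity and AUC answer different questions. Sensitivity is the fraction of true P1 casualties a tool flags; AUC is the probability that a randomly chosen P1 casualty receives a higher score than a randomly chosen non-P1 casualty, with ties counting one half. The sketch below computes both from labels and scores; the data and the 0.5 cut-off are invented for illustration and do not reproduce the TARN or JTTR analyses.

```python
def sensitivity(labels: list[int], predictions: list[int]) -> float:
    """True positives divided by all actual positives (1 = P1 casualty)."""
    positives = sum(labels)
    if positives == 0:
        raise ValueError("sensitivity is undefined without positive cases")
    tp = sum(1 for y, p in zip(labels, predictions) if y == 1 and p == 1)
    return tp / positives


def auc(labels: list[int], scores: list[float]) -> float:
    """Pairwise (Mann-Whitney) estimate of the ROC AUC."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a correct ordering
    return wins / (len(pos) * len(neg))


labels = [1, 1, 1, 0, 0, 0, 0]
scores = [0.9, 0.6, 0.3, 0.6, 0.2, 0.1, 0.4]
flagged = [1 if s >= 0.5 else 0 for s in scores]  # hypothetical cut-off
print(round(sensitivity(labels, flagged), 3), round(auc(labels, scores), 3))
```

When a tool only outputs a binary flag, this AUC reduces to the average of its sensitivity and specificity, so a flag-style tool and a graded-score model are summarized on the same 0-to-1 scale but with different amounts of information behind the number.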

In contrast, a simple ML-derived decision tree model using only three predictive variables (inability to breathe spontaneously, presence of chest injury, and mental status) performed better on both measures, with a sensitivity of 73.0% and an AUC of 0.782. This model formed the candidate primary triage tool. A proposed secondary tool, intended for use on a portable device, added a fourth variable, injury mechanism, and on external validation against military trauma data from the Joint Theatre Trauma Registry (JTTR) achieved a markedly higher sensitivity of 97.6% with an AUC of 0.778.
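
The published tree's split order and thresholds are not given here, so the sketch below uses a hypothetical rule ordering purely to show how a three-variable tree of this kind can be stored and executed on a handheld device. The variable names, the binary encoding of mental status, and the branch outcomes are all assumptions, not the TARN-derived model.

```python
# Each internal node tests one boolean finding; leaves are plain strings.
# Hypothetical structure: NOT the published TARN-derived tree.
TREE = {
    "test": "breathing_spontaneously",
    "false": "P1",
    "true": {
        "test": "chest_injury",
        "true": "P1",
        "false": {
            "test": "altered_mental_status",
            "true": "P1",
            "false": "not P1",
        },
    },
}


def classify(node, findings: dict[str, bool]) -> str:
    """Walk the tree from the root until a leaf label is reached."""
    while isinstance(node, dict):
        branch = "true" if findings[node["test"]] else "false"
        node = node[branch]
    return node


casualty = {"breathing_spontaneously": True, "chest_injury": False,
            "altered_mental_status": True}
print(classify(TREE, casualty))  # P1
```

Storing the tree as data rather than as code means a retrained tree could be loaded onto a device without changing the evaluator; a fourth variable such as injury mechanism would simply add nodes that test it.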

The superior performance of these ML models signals a fundamental change in prioritization methodology. Traditional triage systems classify the patient's immediate state (Immediate, Delayed, Minimal, Expectant - IDME).7 AI, conversely, is focused on anticipating future clinical deterioration—specifically, predicting the need for life-saving interventions (LSIs).8 This capability allows for anticipatory decisions, critical for prolonged field care (PFC) scenarios where evacuation is delayed.2 The inclusion of the injury mechanism in the high-performing secondary model further reinforces that simple physiological readings alone are insufficient; context remains a crucial predictive factor that must be reliably captured and fused with physiological data.

AI for Resource Allocation and MCI Management

Beyond individual casualty assessment, AI systems are proving invaluable for optimizing logistics and macro-level decision-making during mass casualty events. Deep reinforcement learning-based AI agents have been developed and validated to optimize patient transfer decisions during simulated MCIs.5 These systems balance complex variables in real time, including patient acuity levels, specialized care requirements, hospital capacities, and transport logistics.
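
The deep reinforcement learning agents cited above learn a transfer policy from simulation, which is beyond a short example. As a stand-in, the sketch below uses a plain greedy heuristic, explicitly not reinforcement learning, to show the variables such a policy must balance: casualties are handled in order of acuity, and each goes to the nearest facility that has both the required specialty and a free bed. Facility names, capabilities, capacities, and transport times are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Hospital:
    name: str
    specialties: set[str]
    free_beds: int
    transport_min: float  # hypothetical transport time from the incident


@dataclass
class Patient:
    pid: str
    acuity: int   # higher = more urgent (hypothetical scale)
    needs: str    # required specialty, e.g. "neuro" or "general"


def allocate(patients: list[Patient], hospitals: list[Hospital]) -> dict[str, str]:
    """Greedy: most urgent first, nearest capable hospital with a free bed.

    Note: decrements free_beds on the Hospital objects passed in.
    """
    plan: dict[str, str] = {}
    for p in sorted(patients, key=lambda p: p.acuity, reverse=True):
        options = [h for h in hospitals
                   if p.needs in h.specialties and h.free_beds > 0]
        if not options:
            plan[p.pid] = "UNASSIGNED"  # escalate: no capable bed available
            continue
        best = min(options, key=lambda h: h.transport_min)
        best.free_beds -= 1
        plan[p.pid] = best.name
    return plan


hospitals = [
    Hospital("Forward-Surgical", {"general"}, free_beds=1, transport_min=20),
    Hospital("Regional-Neuro", {"general", "neuro"}, free_beds=2, transport_min=60),
]
patients = [
    Patient("p1", acuity=3, needs="neuro"),
    Patient("p2", acuity=3, needs="general"),
    Patient("p3", acuity=2, needs="general"),
    Patient("p4", acuity=1, needs="neuro"),
]
print(allocate(patients, hospitals))
```

The example also exposes a weakness of greedy rules: p3, a general-surgery case, takes the last bed at the neurosurgical facility, leaving p4 unassigned. A learned or optimization-based policy can weigh such conflicts across all casualties at once, which is part of the motivation for the reinforcement learning approach.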

In controlled user studies, increasing AI involvement significantly improved decision quality and consistency. The AI agent outperformed trauma surgeons ($p < 0.001$) and enabled non-experts to achieve expert-level performance when assisted.5 Functionally, these AI systems provide real-time data analysis, predictive analytics to forecast potential health issues, and automated alert systems, with the aim of reducing reaction times and improving survival rates.

Engineering Trust: The DARPA In the Moment (ITM) Program

Recognizing that the successful deployment of AI hinges on human trust, the Defense Advanced Research Projects Agency (DARPA) launched the In the Moment (ITM) program.10 This program aims to create a foundational framework for trusted algorithmic decision-making in challenging domains such as medical triage, where uncertainty and conflicting values are common.10

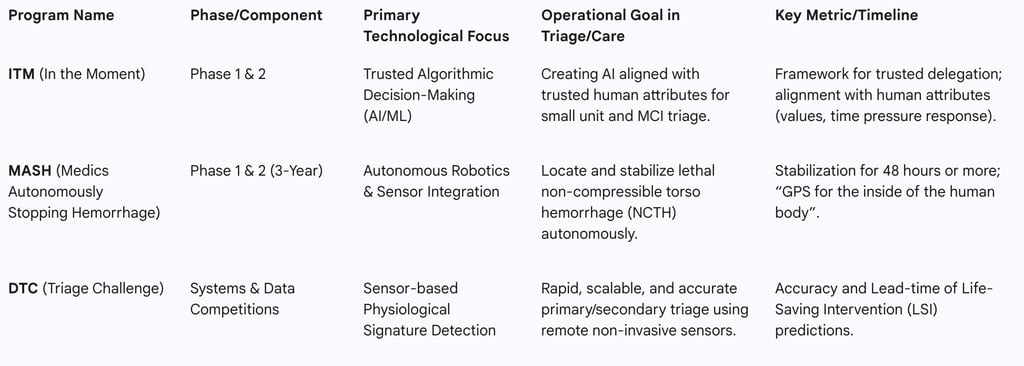

ITM seeks to develop algorithms that reflect "key attributes that are aligned with trusted humans," including how the algorithm evaluates a situation, relies on domain knowledge, responds to time pressure, and uses principles or values to prioritize care.10 The program is structured in two phases to manage complexity: Phase 1 addresses triage for small military units in austere environments, while Phase 2 scales up to complex mass casualty events.10

Crucially, the ITM program focuses on establishing a delegation framework—understanding how human attributes lead to the trusted delegation of life-and-death decision-making.10 ITM advances are expected to support both fully automated and semi-automated decision-making, where the human medic retains the option to override the algorithm.10 This emphasis on human-AI balance is essential for preventing automation bias and ensuring that the system is accepted by frontline operators.11 The program acknowledges that difficult triage decisions often lack a single "correct" answer and rely on ambiguous judgment, suggesting that successful military AI will likely require hybrid approaches, such as Neuro-Symbolic AI, which combine predictive power with explainability to optimize resource allocation.
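
The distinction between fully automated and semi-automated modes can be made concrete as a small delegation rule. In the sketch below, the algorithm always returns a recommendation together with a human-readable rationale; in semi-automated mode the medic's decision, if given, takes precedence, and every disagreement is logged for later review. The mode names, the priority labels, and the log format are hypothetical and are not drawn from ITM.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Mode(Enum):
    FULLY_AUTOMATED = "full"
    SEMI_AUTOMATED = "semi"


@dataclass
class Recommendation:
    priority: str
    rationale: str  # explanation shown to the medic alongside the output


@dataclass
class Decision:
    priority: str
    source: str     # "algorithm" or "medic"


audit_log: list[str] = []


def decide(rec: Recommendation, mode: Mode,
           medic_choice: Optional[str] = None) -> Decision:
    """Apply the delegation rule; the medic's choice wins in semi-automated mode."""
    if mode is Mode.SEMI_AUTOMATED and medic_choice is not None:
        if medic_choice != rec.priority:
            # Record overrides so human/algorithm disagreements can be reviewed.
            audit_log.append(
                f"override: {rec.priority} -> {medic_choice} ({rec.rationale})")
        return Decision(medic_choice, "medic")
    # Fully automated, or no medic input: the recommendation stands.
    return Decision(rec.priority, "algorithm")


rec = Recommendation("P1", "not breathing spontaneously")
print(decide(rec, Mode.SEMI_AUTOMATED, medic_choice="P2"))
print(audit_log)
```

Logging overrides rather than blocking them keeps final authority with the medic while producing a record of where human and algorithmic judgments diverge, which is the kind of evidence a trust and delegation framework needs.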

Data Fusion and Remote Sensing: The Foundation for Continuous Triage

The success of AI-driven triage depends directly on the quality, continuity, and integrity of the physiological data input. AI algorithms require high-fidelity, real-time input collected remotely from the point of injury, especially in environments where manual monitoring is impractical or unsafe.

Requirements for Continuous, Non-Invasive Data Collection

For battlefield application, wearable health sensors must meet stringent military requirements, including robustness against environmental hazards (water-resistant, durable against crushing forces), extended battery life, and compatibility with military communications networks.13 Medics have historically prioritized measurements of Blood Pressure (BP), Heart Rate (HR), and Oxygen Saturation ($\text{SpO}_{2}$) for triage, recognizing that hemorrhage remains the leading preventable cause of death in major trauma.13

Recent technological advancements address the challenge of continuous, cuff-less BP monitoring, which is critical for hemorrhage assessment. Devices like the Sempulse Halo monitor provide continuous, non-invasive monitoring of clinical-grade pulse oximetry, cuff-less blood pressure (NIBP), heart rate, and respiratory rate.15 This remote, continuous collection of key physiological parameters enables sophisticated telehealth in the field.16

Furthermore, data fusion techniques are employed to calculate B-level physiological parameters (e.g., blood pressure, respiration rate) by combining information from multiple biosensors.18 This multi-sensor approach is necessary to monitor the subtle physiological indicators of impending crisis. For instance, continuous monitoring of the arterial pressure waveform morphology allows for the assessment of Compensatory Reserve Measurement (CRM) outside of traditional clinical environments.14 CRM is a sensitive indicator of hemorrhage and provides an anticipatory warning far earlier than traditional vital sign destabilization, thereby transforming triage from a static classification into a continuous, adaptive process capable of issuing LSI warnings proactively.8
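
The specific fusion method used by such systems is not stated here. One standard technique, shown as an assumption, is inverse-variance weighting: each sensor's estimate of the same quantity is weighted by the reciprocal of its error variance, so noisier sensors count for less. The two sensor sources and their variances below are hypothetical.

```python
def fuse(estimates: list[tuple[float, float]]) -> tuple[float, float]:
    """Inverse-variance weighted mean of (value, variance) pairs.

    Returns the fused value and its variance, assuming independent,
    unbiased sensor errors.
    """
    if not estimates or any(var <= 0 for _, var in estimates):
        raise ValueError("need at least one estimate with positive variance")
    weights = [1.0 / var for _, var in estimates]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    return value, 1.0 / total


# Hypothetical respiration-rate estimates (breaths/min, variance) from a
# chest-band sensor and from a rate derived from a pulse-oximetry waveform.
fused_rate, fused_var = fuse([(18.0, 1.0), (22.0, 4.0)])
print(round(fused_rate, 2), round(fused_var, 2))
```

The fused variance (0.8 here) is smaller than either input's, which is the practical payoff of combining sensors. The independence assumption matters: correlated errors, such as a motion artifact affecting both sensors at once, would make the fused estimate overconfident.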

Communication Challenges in Degraded Environments

The effectiveness of continuous triage systems is wholly dependent on the reliable transmission of data from the sensor to the decision support system. This necessity faces significant hurdles in the context of LSCO, where military operations increasingly occur in communications-degraded or -denied environments.19

Forward-deployed decision-makers, including medical personnel, must function effectively under conditions where command and control (C2) links are contested.20 This requires investing in robust, low-bandwidth communication protocols optimized for physiological data transmission. If AI relies on continuous, high-fidelity data 15, the reliability and integrity of the communications link become a priority equivalent to weapons effectiveness. Medical data integrity is effectively a combat priority.
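
As an illustration of what "low-bandwidth" can mean in practice, the sketch below packs one set of vital signs into a fixed 11-byte binary record with Python's standard `struct` module instead of a self-describing text format. The field layout, field sizes, and units are hypothetical choices for this example, not an existing military message standard.

```python
import json
import struct

# Hypothetical fixed layout, network byte order, no padding:
# casualty id (uint16), seconds since mission start (uint32),
# heart rate, SpO2 %, systolic BP mmHg, respiratory rate, CRM % (uint8 each).
VITALS_FORMAT = "!HIBBBBB"
KEYS = ("cid", "t", "hr", "spo2", "sbp", "rr", "crm")


def pack_vitals(cid: int, t: int, hr: int, spo2: int,
                sbp: int, rr: int, crm: int) -> bytes:
    # struct.error is raised if any value does not fit its field.
    return struct.pack(VITALS_FORMAT, cid, t, hr, spo2, sbp, rr, crm)


def unpack_vitals(msg: bytes) -> dict[str, int]:
    return dict(zip(KEYS, struct.unpack(VITALS_FORMAT, msg)))


msg = pack_vitals(cid=42, t=3600, hr=118, spo2=93, sbp=92, rr=24, crm=45)
as_json = json.dumps(unpack_vitals(msg)).encode()
print(len(msg), len(as_json))
```

The binary record is several times smaller than the equivalent JSON. The trade-off is that both ends must agree on the layout in advance, and integrity protection (checksums, authentication) would still have to be added on top.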

Compounding the technological challenge is the risk of cognitive exhaustion for human operators. Rapidly changing data presentations and constant chatter on communication links can lead to information overload, degrading a medic’s ability to process and act upon critical information.21 AI decision support must therefore be engineered to synthesize, prioritize, and present only actionable information, rather than overwhelming the human user with raw data streams. Telemedicine links, such as the VERTI application, already integrate medical data exchange with existing tactical radio networks used for command and control of unmanned systems, establishing a precedent for resilient data architecture.
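
A minimal way to present only actionable information is to re-evaluate each casualty on every new reading but notify the medic only when that casualty's alert level changes. The sketch below does this for a stream of Compensatory Reserve Measurement (CRM) values, where lower values indicate less remaining reserve. The two thresholds (60% and 30%) are hypothetical and are not clinically validated cut-offs.

```python
from collections.abc import Iterable, Iterator

# Hypothetical alert bands on CRM (%), checked from most to least severe.
BANDS = [(30, "CRITICAL"), (60, "WARNING")]


def level(crm: float) -> str:
    for threshold, name in BANDS:
        if crm <= threshold:
            return name
    return "OK"


def actionable_alerts(readings: Iterable[tuple[str, float]]) -> Iterator[str]:
    """Yield a message only when a casualty's alert level changes."""
    last: dict[str, str] = {}
    for cid, crm in readings:
        previous = last.get(cid, "OK")
        current = level(crm)
        if current != previous:
            yield f"{cid}: {previous} -> {current} (CRM {crm:.0f}%)"
        last[cid] = current


stream = [("C1", 85), ("C1", 62), ("C1", 58), ("C1", 55), ("C1", 57),
          ("C2", 90), ("C1", 28)]
for alert in actionable_alerts(stream):
    print(alert)
```

Seven readings produce two notifications here. A practical system would likely also need hysteresis, so that a value oscillating around a threshold does not generate a stream of alternating alerts.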

Robotics and Autonomy: Transforming Medical Logistics and Intervention

Autonomous robotic systems are rapidly moving from theoretical concepts to deployable assets, fundamentally altering the operational doctrine for casualty recovery and medical intervention. These systems function as autonomous extensions of the medical team, drastically reducing risk in the most dangerous phases of combat care.

Autonomous Casualty Evacuation (CASEVAC)

Autonomous CASEVAC systems—primarily Unmanned Ground Vehicles (UGVs) and aerial drones—are becoming essential for force protection in high-intensity conflicts.23 This necessity is driven by the reality that adversaries frequently target medical vehicles despite clear markings, rendering human-crewed evacuation inherently perilous.24

UGVs and aerial drones offer a solution, allowing casualty recovery operations to proceed without exposing medical teams to direct fire.24 These vehicles can be rapidly dispatched, remotely steered with overwatch from an aerial drone, and can autonomously navigate complex terrain, avoiding mines and debris, to reach the casualty collection point.25 This ability to clear the battlefield and ensure resupply under contested logistics directly supports the Army Health System imperatives for LSCO.25 Furthermore, the speed and efficiency of autonomous evacuation act as a critical force multiplier by facilitating the rapid return of soldiers to the fight.

During autonomous transport, telemedicine integration is crucial. Applications like VERTI enable the real-time flow of medical information from patient monitors secured on the UGV or Unmanned Aerial System (UAS) to a medical provider at the receiving treatment facility. This capability supports monitored CASEVAC missions even when dedicated assets are denied entry due to weather, terrain, or enemy activity.

Autonomous Field Stabilization and Intervention (DARPA MASH)

The most ambitious step toward autonomous field care is the DARPA Medics Autonomously Stopping Hemorrhage (MASH) program, which targets Non-Compressible Torso Hemorrhage (NCTH). Uncontrolled internal bleeding in the torso is one of the most lethal threats in combat, and MASH aims to develop autonomous capabilities to find and stop this bleeding without requiring a surgeon.

The program’s objective is revolutionary: to develop autonomous systems capable of stabilizing injured warfighters for 48 hours or more, thereby providing crucial time for evacuation to definitive surgical care.27 This long stabilization window fundamentally alters operational planning, shifting the medical timeline from the traditional "Golden Hour" concept toward a "Platinum 48 Hours" for Prolonged Field Care (PFC).

The technical challenge is immense, requiring the creation of a "GPS for the inside of the human body" to precisely locate the source of the bleed among the complex landscape of organs and blood. The three-year MASH program is structured in two phases: Phase 1 focuses on integrating sensors with robotic systems to locate the bleeding; Phase 2 focuses on developing the software and autonomy necessary to stop it. This effort goes beyond deploying existing technology; it involves developing new trauma procedures specifically designed for robotic intervention, demonstrating an inversion of the traditional doctrine development model, where technology itself is now driving the revision of field care protocols.

DARPA Programs Driving Autonomous Battlefield Care

Policy, Regulatory, and Ethical Frontiers for Autonomous Medical Systems

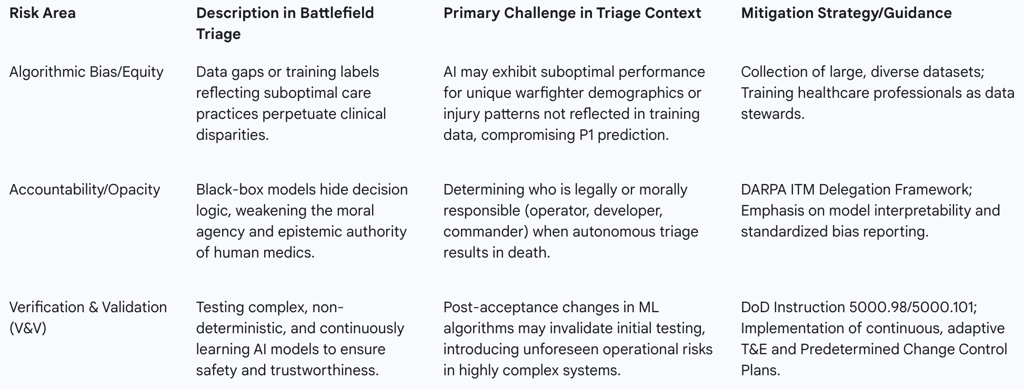

The deployment of autonomous systems in military medical contexts, particularly triage, introduces complex ethical, legal, and operational challenges that must be addressed through robust governance and regulatory frameworks. The potential for AI to influence life-or-death decisions demands close scrutiny of accountability and bias.

Accountability and the Responsibility Gap

A significant challenge in delegating life-critical decisions to autonomous systems lies in the determination of responsibility when errors occur. Autonomous systems, while possessing significant decision-making power, currently lack the capacity to make genuine ethical choices or explore alternative courses of action outside their programming parameters. In a triage scenario, where misprioritization can directly lead to preventable death, the legal and moral liability remains ambiguous: does it rest with the developer, the commanding officer, or the human medic who chose to follow the algorithm’s recommendation?

Furthermore, the introduction of advanced technological mediation can weaken the moral agency of human operators. Automation bias—the overreliance on machine recommendations—can diminish the human capacity for ethical decision-making, especially under the high stress of battle. The inherent opacity of highly sophisticated algorithms further complicates matters, making it difficult to assess the reliability of a given triage prediction and undermining the epistemic authority of the clinician.

Algorithmic Bias and Data Integrity as an Ethical Imperative

The most critical operational risk associated with medical AI is the inherent bias embedded in the training data. Biases can compound throughout the AI lifecycle—from data feature selection and labeling to deployment—leading to substandard clinical decisions and the perpetuation of longstanding healthcare disparities.

In military triage, this translates to specific risks: if the data used to train the AI lacks sufficient sample sizes for certain patient groups (e.g., unique injury patterns or warfighter demographics), the algorithm may perform suboptimally or underestimate severity for those subgroups. Moreover, if the expertly annotated labels used to train supervised models reflect implicit cognitive biases or even substandard care practices prevalent in the source data, the AI will automate and exacerbate these moral and medical failings. The lack of structured training for military healthcare professionals to act as data stewards—annotating and structuring data for ethical AI development—represents a critical vulnerability that links tactical data collection failures directly to strategic ethical risk.

Key Ethical and Policy Risks of AI in Military Triage

Regulatory Frameworks and Testing Standards

The military has established policies for autonomous systems, such as Department of Defense Directive (DoDD) 3000.09, which mandates rigorous hardware and software Verification and Validation (V&V) and realistic operational Test and Evaluation (T&E). However, autonomous systems, especially those using machine learning, exhibit probabilistic behavior and can learn and change outcomes after deployment. This continuous learning creates a regulatory catch-22: the very characteristic that makes AI valuable, its ability to adapt, is what invalidates the foundational V&V conducted before deployment.

To mitigate this, the DoD, through guidance such as DoDM 5000.101, requires improved test planning, rigor, and the characterization of system performance across the acquisition lifecycle. T&E must assess the test and training datasets and ensure compliance with the DoD’s five AI Ethical Principles. The regulatory solution is not to prohibit learning, but to regulate the process of change itself. This aligns with the Food and Drug Administration's (FDA) approach for commercial AI medical devices, which increasingly relies on a Predetermined Change Control Plan (PCCP) submitted upfront. A PCCP allows planned modifications to the AI/ML model to occur without requiring additional marketing submissions for every change, provided the modifications adhere to the plan. Adopting this "change control" philosophy in the military context requires that continuous, post-acceptance testing become a standard operational requirement, moving V&V onto the operational network.
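
A PCCP-style control for a deployed triage model can be pictured as a gate that every model update must pass before release: the update is evaluated on a locked reference dataset and accepted only if pre-specified metrics stay within limits declared in advance. This is a minimal sketch; the metric names, floors, and tolerance are hypothetical and are not taken from FDA guidance or DoDM 5000.101.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChangePlan:
    # Hypothetical acceptance limits, declared before deployment.
    min_sensitivity: float
    min_auc: float
    max_sensitivity_drop: float  # allowed drop relative to the accepted model


def accept_update(plan: ChangePlan, baseline: dict[str, float],
                  candidate: dict[str, float]) -> tuple[bool, list[str]]:
    """Return (accepted, reasons) for a candidate model's locked-test metrics."""
    reasons = []
    if candidate["sensitivity"] < plan.min_sensitivity:
        reasons.append("sensitivity below floor")
    if candidate["auc"] < plan.min_auc:
        reasons.append("AUC below floor")
    if baseline["sensitivity"] - candidate["sensitivity"] > plan.max_sensitivity_drop:
        reasons.append("sensitivity regressed beyond tolerance")
    return (not reasons, reasons)


plan = ChangePlan(min_sensitivity=0.90, min_auc=0.75, max_sensitivity_drop=0.02)
baseline = {"sensitivity": 0.95, "auc": 0.78}
print(accept_update(plan, baseline, {"sensitivity": 0.94, "auc": 0.79}))
print(accept_update(plan, baseline, {"sensitivity": 0.92, "auc": 0.80}))
```

Because the limits are fixed before deployment, the same check can be rerun after every retraining cycle; the second candidate is rejected even though it clears both floors, because it gives up more sensitivity than the plan allows.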

Internationally, frameworks are evolving to guide responsible deployment. The Political Declaration on Responsible Military Use of Artificial Intelligence and Autonomy, first announced at the REAIM 2023 summit, provides a normative basis for developing and deploying military AI in compliance with International Humanitarian Law (IHL). Simultaneously, NATO's Chiefs of Military Medical Services (COMEDS) is steering the development of new medical support concepts, emphasizing the need to lead by example in the responsible development of AI capabilities in line with NATO's Principles of Responsible Use (PRUs).

Strategic Recommendations and Future Outlook

The integration of AI, data analytics, and robotics into military triage is not a technological option but a strategic imperative driven by the critical 2-hour surgical timeline and the necessity of force protection in contested environments. To successfully transition from theoretical development to operational reality, the following recommendations must be implemented.

A. Recommendations for Data Governance and Training

Mandate Data Stewardship Training: The Military Health System (MHS) must institute mandatory, recurrent training for all medical personnel, from tactical combat lifesavers to surgeons, focusing on the documentation, annotation, and structuring of clinical and operational data. This formalizes the understanding that data collection is an ethical responsibility, essential for mitigating algorithmic bias and ensuring high-fidelity input for AI development.

Establish Continuous Learning Infrastructure: Invest immediately in the digital health infrastructure required to implement a learning health-care system. This includes utilizing Casualty Digital Twins (CDTs) to rapidly inform combat casualty care guidelines based on predicted outcomes, ensuring that field data continually refines both AI models and standard medical protocols.

Prioritize Resilient Data Architecture: Commit strategic investment to develop and deploy robust, low-bandwidth communication protocols optimized for physiological data transmission in contested, degraded environments. The seamless medical data exchange demonstrated in telemedicine platforms must be hardened to ensure that the AI/ADS system remains functional when tactical communications are denied.

B. Recommendations for Doctrine and Policy Integration

Revise Triage Doctrine to Support Continuous Triage: Formally update TCCC/TECC guidelines to move beyond static, point-in-time classification (IDME). Adopt a two-tiered system: a rapid, AI-driven primary screening (utilizing ML models focused on LSI prediction) followed by a human-validated, AI-assisted secondary prioritization. This doctrinal shift leverages AI for cognitive offload and anticipatory warning.

Formalize Autonomous CASEVAC as Force Protection: Integrate autonomous platforms (UGVs and UAS) into standard medical rules of engagement (ROE) as essential, non-negotiable force protection assets for casualty recovery. This must move beyond experimental use to mainstream deployment, recognizing the adversary's tactic of targeting medical teams.

Adopt Adaptive Testing Standards: Align DoD T&E requirements (DoDM 5000.101) with the dynamic nature of learning systems. Require Predetermined Change Control Plans (PCCPs) for all deployed medical AI, mandating continuous V&V post-acceptance to manage the regulatory risk introduced by self-learning algorithms.

Roadmap for Human-AI Collaboration in Battlefield Triage

The strategic roadmap for integrating advanced decision support must proceed in distinct, sequenced phases:

Near-Term (0-3 Years): Focus on maximizing data collection and human cognitive assistance. Deploy robust, wearable, continuous physiological sensors capable of cuff-less BP monitoring and CRM tracking. Integrate initial decision-support AI, such as decision tree classifier (DTC) models, for rapid primary triage in austere environments. The primary focus will be achieving Phase 1 integration and alignment under the DARPA ITM program.

Mid-Term (3-7 Years): Operationalize UGVs for logistics, resupply, and autonomous CASEVAC under fire. Integrate AI-driven patient transfer optimization for managing MCI logistics, balancing acuity and hospital capacity. Begin formal doctrinal updates reflecting the delegation and trust frameworks established by ITM Phase 2.

Long-Term (7+ Years): Integrate autonomous robotic stabilization capabilities developed through the MASH program into forward medical facilities. This will cement the doctrinal shift toward prolonged autonomous stabilization, enabling medical resiliency deep within denied areas. Achieve widespread deployment of adaptive, self-learning medical systems governed by transparent V&V and strict regulatory adherence to IHL and ethical principles.