Implementation and Governance Frameworks for AI-Assisted Emergency Department Triage Systems

The escalating global crisis of Emergency Department (ED) overcrowding and the inherent limitations of conventional, subjective triage methods (e.g., Emergency Severity Index, Canadian Triage and Acuity Scale) necessitate transformative solutions. The integration of artificial intelligence (AI) and machine learning (ML) models into acute care settings has emerged as a crucial strategy, offering superior predictive capabilities compared to existing systems.

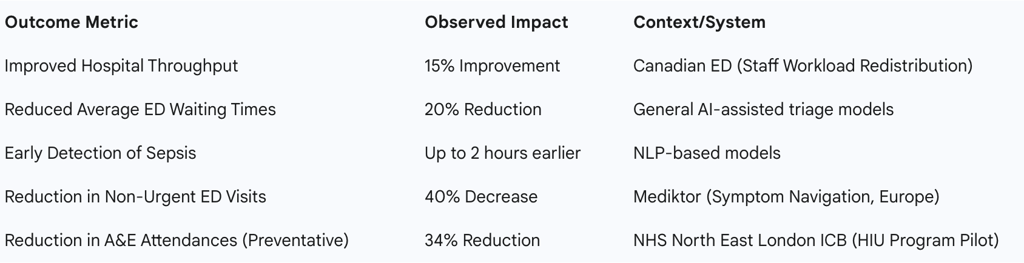

Documented real-world implementations reveal two distinct pathways for successful AI deployment in emergency medicine. The first pathway focuses on Acute Triage Optimization within the ED workflow, achieving demonstrable operational efficiencies such as a 15% improvement in hospital throughput following workload redistribution in a Canadian ED and a 20% reduction in average ED waiting times. The second, and arguably higher-impact, pathway focuses on Systemic Demand Management, employing preventative triage to reduce input volume. Proactive identification of High Intensity Users (HIU) within the UK’s National Health Service (NHS) has demonstrated substantial systemic benefits, including a 34% reduction in emergency attendances in pilot regions.

Crucially, the technical superiority of ML models is contingent upon robust governance frameworks. Successful clinical deployment requires addressing critical non-technical challenges, including mitigating algorithmic bias rooted in historical data, ensuring transparency through Explainable AI (XAI) to foster clinician trust, and establishing clear legal and ethical liability frameworks for decisions made in high-stakes environments.

Foundational Evidence: Predictive Superiority and Clinical Efficacy

Machine Learning Models vs. Conventional Scales

Machine learning models consistently exhibit superior discrimination abilities when compared to conventional, fixed-parameter triage scores for the screening of high-acuity risks. This predictive edge is evident in areas such as identifying patients at risk of hospitalization, Intensive Care Unit (ICU) admission, mortality, and the combined critical care outcome. The superiority stems from AI's capacity to ingest and process vast, multidimensional datasets that traditional scales cannot incorporate effectively.

ML models leverage diverse data inputs, including demographics, real-time monitoring devices, vital signs, and, critically, unstructured data like physician notes and clinical narratives. Natural Language Processing (NLP) techniques, often utilizing models such as ClinicalBERT for text analysis, transform this unstructured information into meaningful features (text embeddings), supporting more nuanced risk assessment. The synthesis of these complex data points allows the AI to provide a more accurate and comprehensive risk profile than traditional subjective or rule-based assessments, which often struggle with variability and high patient volumes.

Quantifying Clinical Impact: Earlier Intervention and Risk Mitigation

The integration of AI into the triage process yields tangible enhancements in predictive accuracy, risk assessment, and disease identification. For time-critical conditions, this enhancement translates directly into improved patient outcomes. For instance, studies have shown that NLP-based models were capable of detecting sepsis up to 2 hours earlier than human clinicians. This capability is critical because earlier diagnosis and intervention for conditions like sepsis directly improve survival rates, transforming AI from a purely operational tool into a life-saving clinical instrument.

Furthermore, AI algorithms, leveraging clinical data and text embeddings, predict the need for ICU admissions more accurately than traditional scales. This ability to anticipate high-resource utilization requirements supports faster and more precise ED decision-making, ensuring that critically ill patients are prioritized and allocated resources without unnecessary delay.

Operational Enhancements in the Acute Setting

Enhanced predictive accuracy serves as the primary driver for operational improvements within the ED. If an AI system can reliably and rapidly stratify risk, it enables the clinical team to bypass initial processing bottlenecks and expedite resource allocation. Documented operational benefits include a reported 20% reduction in average ED waiting times following the integration of AI-assisted triage systems.

Beyond waiting times, AI contributes to substantial improvements in hospital throughput. A specific case involving a Canadian ED reported that hospital throughput improved by 15% after AI triage models were deployed to redistribute staff workload. This demonstrates that the efficiency gain is not merely a statistical artifact but a measurable consequence of optimizing the utilization of human capital, achieved by providing clinicians with superior, automated prioritization data. The operational gains are thus a downstream effect of clinical efficacy: by enabling earlier, more accurate diagnoses and resource planning, AI maximizes the efficiency of the clinical workforce.

Global Implementation Case Studies: Acute Risk Stratification and Workflow Integration

Case Study: The Score for Emergency Risk Prediction (SERP) in Singapore

The development and planned implementation of the Score for Emergency Risk Prediction (SERP) in Singapore provides a detailed look at the complexities of deploying high-stakes AI tools. SERP is a machine learning tool designed to estimate mortality risk upon presentation at the ED, demonstrating strong predictive accuracy in retrospective studies.

A significant hurdle emerged during the planning phase for real-world capabilities testing. Researchers concluded that a traditional pre-implementation randomized controlled trial (RCT) would not be ethically or practically feasible. The core difficulty lies in how the AI tool interacts with and influences clinical judgment; blinding the clinical staff to a mortality risk score that could potentially change patient outcomes proved too complex. This conflict between robust validation methodology and clinical urgency led to the adoption of an ethically appropriate implementation strategy aligned with the Learning Health Systems (LHS) framework. The LHS approach emphasizes ongoing evaluation and continuous learning during deployment, ensuring safety and efficacy are maintained and improved over time, recognizing that the AI system fundamentally alters the decision-making process in real-time and cannot be easily isolated as a standard drug intervention.

Case Study: Symptom Navigation and Referral Optimization in Europe

In Europe, AI tools are heavily leveraged for decentralized triage and patient navigation, effectively diverting inappropriate demand away from acute ED services.

Mediktor: This platform utilizes a combination of Natural Language Processing (NLP), machine learning, and Bayesian networks to perform symptom assessment and guide patients to the most appropriate level of care based on urgency. This rerouting strategy has resulted in quantifiable demand reduction, including a documented 40% decrease in non-urgent emergency-room visits and a 54% reduction in overall doctor visits. Implementation at facilities like Hospital Universitari Arnau de Vilanova demonstrated the practical effectiveness of patient guidance, where 90.9% of patients followed the Mediktor recommendation when they were directed to a primary urgent care center instead of the hospital. This high patient compliance rate validates the utility of using AI for high-quality, pre-arrival patient navigation.

Visiba (Red Robin): This system uses voice-recognition software to route patients in urgent care, who then enter symptoms via an online link. A probability-based AI tool named Red Robin assesses the condition and its acuity, sharing this assessment with a clinician who makes the final decision. This application yielded significant operational gains: one study reported that Visiba led to 38% more cases being resolved without the need for an onward referral compared to traditional approaches. By acting as an effective filter for low-acuity cases, these systems reduce the unnecessary consumption of high-cost ED resources, demonstrating that AI is highly effective when deployed as an external gatekeeper rather than solely as an internal prioritizing mechanism.

Real-World Implementation Case Studies: Preventative Triage and Demand Management

While optimizing flow within the ED is critical, some of the most profound organizational impacts have been achieved through the preventative deployment of AI models designed to reduce systemic demand entirely.

The NHS High Intensity User (HIU) Services Initiative

The UK’s National Health Service (NHS) has adopted a strategic approach to use AI for predicting patients who are at high risk of becoming frequent users of emergency services—defined as attending A&E more than five times annually. This deployment shifts the clinical focus from reactive triage to proactive, preventative support designed to tackle the underlying social and medical determinants of frequent attendance, such as long-term conditions, poverty, and social isolation.
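The attendance threshold in this definition can be illustrated with a minimal sketch. It only applies the retrospective rule (more than five A&E attendances in a year) to synthetic records; it is not the predictive screening model used by the NHS programme. The function name, the record layout, and the use of a calendar year as the counting window are assumptions made for illustration.

```python
from collections import Counter
from datetime import date

# Threshold from the definition above: more than five A&E attendances in a year.
HIU_THRESHOLD = 5


def flag_high_intensity_users(attendances, year):
    """Return the patient IDs with more than HIU_THRESHOLD attendances in `year`.

    `attendances` is an iterable of (patient_id, attendance_date) pairs.
    Counting per calendar year is an assumption made for this sketch.
    """
    counts = Counter(pid for pid, when in attendances if when.year == year)
    return {pid for pid, n in counts.items() if n > HIU_THRESHOLD}


# Synthetic example data, not real patient records.
records = (
    [("p1", date(2023, month, 1)) for month in range(1, 8)]    # 7 attendances
    + [("p2", date(2023, month, 1)) for month in range(1, 6)]  # 5 attendances
    + [("p3", date(2022, 12, 31))]                             # outside the year
)
hiu = flag_high_intensity_users(records, 2023)
print(hiu)
```

Only `p1` is flagged here: `p2` sits exactly at five attendances, which does not exceed the threshold. A predictive system would aim to identify such patients before they cross it.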

In North East London (NEL), the NHS launched a three-year program in collaboration with Health Navigator and UCLPartners. This initiative employs advanced AI screening technology that analyzes routinely collected hospital data to identify individuals requiring immediate preventative support. The AI identifies high-risk patients, particularly those with long-term conditions such as asthma or diabetes, enabling healthcare professionals to proactively reach out with personalized clinical coaching and self-management techniques before the patient reaches a crisis point.

The results supporting this implementation are substantial. Pilot data driving the initiative showed significant benefits, including a 34% reduction in emergency attendances and a 25% reduction in hospital bed days. Forecasting models for the NEL program anticipate a reduction of 13,000 annual A&E attendances and the saving of 26,673 unplanned bed days over three years. These figures illustrate that the organizational return on investment for AI triage is maximized when the technology is used to manage demand at the population health level, fundamentally reducing the input volume to the ED rather than marginally improving internal flow.

Systemic Impact Beyond the ED

The HIU services initiative has been rolled out to support over 125 emergency departments across England. The impact on individual patient care is significant; for example, at South Tees Hospitals NHS Foundation Trust, data-powered identification of HIU individuals enabled proactive support that helped them more than halve their visits to A&E, from a baseline of 33 attendances per year.

This systematic effort to identify and resolve the root causes of repeated visits demonstrates the strategic use of predictive analytics as a public health tool, thereby alleviating the severe pressure faced by acute EDs globally.

Technical Architecture, Model Interpretability, and Data Requirements

Underlying Machine Learning Methodologies

AI-assisted triage systems rely on a robust hierarchy of machine learning methodologies. The common models employed include:

Supervised ML Models: These include classical approaches like Logistic Regression (CVLR), Support Vector Machines (SVM), Random Forest (RF), Decision Trees, and highly effective ensemble methods such as Extreme Gradient Boosting (XGBoost) [1].

Deep Learning (DL) Models: These are vital for processing complex, high-dimensional data. Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs) handle sequential data, while transformer models are increasingly used for sophisticated Natural Language Processing tasks on clinical text [1].

Reinforcement Learning (RL): This represents an emerging area focused on developing adaptive triage strategies that can refine their performance based on continuous feedback derived from long-term patient outcomes.

When predicting operational outcomes, such as prolonged wait times (categorized as $\geq 30$ minutes for ESI level 3 patients), multiple algorithms (CVLR, RF, XGBoost, Artificial Neural Networks (ANN), and SVM) demonstrated comparable performance [13]. A critical finding is that, when these models are evaluated for clinical relevance, minimizing the False Negative Rate (FNR) is prioritized over maximizing overall accuracy [13]. This requirement reflects the high-stakes nature of ED triage: failing to identify a critical patient (a false negative) is ethically and clinically far more damaging than occasionally over-triaging a less acute patient (a false positive).
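The difference between optimizing for accuracy and constraining the FNR can be made concrete with a small sketch. The labels and scores below are synthetic, and the function names and the threshold-selection rule (take the highest threshold whose FNR stays within a chosen cap) are illustrative assumptions, not the procedure used in the cited study.

```python
def confusion_counts(y_true, y_score, threshold):
    """Count TP, FP, TN, FN when scores at or above `threshold` are flagged as critical."""
    tp = fp = tn = fn = 0
    for truth, score in zip(y_true, y_score):
        flagged = score >= threshold
        if truth and flagged:
            tp += 1
        elif truth:
            fn += 1
        elif flagged:
            fp += 1
        else:
            tn += 1
    return tp, fp, tn, fn


def triage_rates(y_true, y_score, threshold):
    """Return (FNR, FPR, accuracy) at the given threshold."""
    tp, fp, tn, fn = confusion_counts(y_true, y_score, threshold)
    fnr = fn / (fn + tp) if (fn + tp) else 0.0
    fpr = fp / (fp + tn) if (fp + tn) else 0.0
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    return fnr, fpr, accuracy


def highest_threshold_within_fnr(y_true, y_score, max_fnr):
    """Return the highest observed score usable as a threshold with FNR <= max_fnr."""
    for candidate in sorted(set(y_score), reverse=True):
        if triage_rates(y_true, y_score, candidate)[0] <= max_fnr:
            return candidate
    return None  # only reached if y_score is empty or max_fnr is negative


# Synthetic data: 1 = truly critical patient, 0 = not critical.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_score = [0.9, 0.8, 0.6, 0.3, 0.7, 0.4, 0.2, 0.2, 0.1, 0.1]

default_rates = triage_rates(y_true, y_score, 0.5)
safe_threshold = highest_threshold_within_fnr(y_true, y_score, max_fnr=0.0)
safe_rates = triage_rates(y_true, y_score, safe_threshold)
print(default_rates, safe_threshold, safe_rates)
```

On this toy data both thresholds give the same accuracy (0.8), yet the default threshold of 0.5 misses one in four critical patients, while the lower threshold of 0.3 misses none at the cost of a higher false positive rate. Accuracy alone cannot distinguish the two operating points, which is why the FNR is treated as the primary constraint.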

The Critical Role of Explainable AI (XAI)

For AI models to transition from research tools to trusted clinical decision support systems, they must address the "black box" problem [1]. Clinician acceptance is strongly correlated with the ability to understand how a prediction was reached.

Explainable AI (XAI) techniques, such as SHAP (SHapley Additive exPlanations) and PDP (Partial Dependence Plots), are essential for interpreting complex model outputs and providing actionable feedback [13]. For example, in a study predicting prolonged ED wait times using XGBoost, XAI techniques identified specific external and internal factors driving the prediction [13]. The top features influencing wait times were found to be the ED crowding condition and the patient mode of arrival [13]. Providing this level of detail allows hospital administrators and clinical managers to turn a statistical prediction into an immediate operational intervention by addressing the underlying drivers, for example through resource deployment or patient routing.
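The idea behind SHAP attributions can be illustrated with a brute-force Shapley calculation on a toy model. This is a minimal sketch, not the SHAP library and not the XGBoost model from the cited study: the wait-time function, its coefficients, the feature names, and the reference patient are all invented for illustration. Exhaustive enumeration is only practical for a handful of features.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, instance, baseline):
    """Exact Shapley values for one prediction by enumerating feature coalitions.

    Features outside a coalition are replaced by their baseline value.
    """
    names = list(instance)
    n = len(names)

    def value(coalition):
        x = {k: (instance[k] if k in coalition else baseline[k]) for k in names}
        return model(x)

    phi = {}
    for name in names:
        others = [k for k in names if k != name]
        total = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                members = set(subset)
                total += weight * (value(members | {name}) - value(members))
        phi[name] = total
    return phi


def toy_wait_model(x):
    """Hypothetical predicted wait in minutes; all coefficients are invented."""
    wait = 10.0 + 0.5 * x["ed_occupancy"]
    if x["arrival_mode"] == "walk_in":
        wait += 8.0
    if x["ed_occupancy"] > 40 and x["arrival_mode"] == "walk_in":
        wait += 6.0  # interaction: walk-ins wait longer when the ED is crowded
    return wait


patient = {"ed_occupancy": 60, "arrival_mode": "walk_in", "age": 70}
reference = {"ed_occupancy": 20, "arrival_mode": "ambulance", "age": 40}
phi = shapley_values(toy_wait_model, patient, reference)
print(phi)
```

For this patient the toy model predicts 54 minutes against 20 minutes for the reference patient. The 34-minute difference is attributed as 23 minutes to crowding, 11 minutes to arrival mode, and 0 to age, with the interaction term split evenly between the two contributing features. The attributions always sum to the gap between the prediction and the baseline, which is the property that makes them auditable.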

Data Requirements and Workflow Integration

The reliability and generalizability of AI models are fundamentally dependent on the quality and volume of training data [6]. These systems require comprehensive inputs from Electronic Health Records (EHRs), including historical triage records, demographics, lab results, imaging, and real-time monitoring devices [1].

Data preparation is intensive, requiring careful preprocessing that involves normalizing structured variables and applying robust NLP techniques (tokenization, lemmatization, embedding) to unstructured clinical notes [1]. Furthermore, stringent data de-identification procedures must be implemented to comply with regulatory standards such as the Health Insurance Portability and Accountability Act (HIPAA) or the General Data Protection Regulation (GDPR), ensuring patient privacy throughout the development cycle [1].
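Two of these preprocessing steps, normalizing a structured variable and tokenizing a clinical note, can be sketched with the standard library alone. The z-score scaling, the regular expression, and the sample values are assumptions chosen for illustration. Lemmatization and embedding are omitted because they depend on external language models, and nothing here performs de-identification.

```python
import re
from statistics import mean, pstdev


def zscore(values):
    """Scale values to zero mean and unit (population) standard deviation."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


# Simple tokenizer: lowercase runs of letters and digits, keeping apostrophe suffixes.
TOKEN_PATTERN = re.compile(r"[a-z0-9]+(?:'[a-z]+)?")


def tokenize(note):
    """Split a free-text note into lowercase tokens."""
    return TOKEN_PATTERN.findall(note.lower())


# Synthetic examples, not real patient data.
heart_rates = [60, 80, 100]
normalized = zscore(heart_rates)
tokens = tokenize("Pt c/o chest pain, SOB x2 days.")
print(normalized)
print(tokens)
```

Note that naive tokenization splits the abbreviation "c/o" into two tokens, a small example of why clinical text usually needs domain-aware NLP tooling rather than generic rules.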

Successful real-world implementation mandates seamless integration with existing clinical workflows, typically via EHR systems [1]. The optimal integration involves two-way data syncing, which minimizes data entry errors and redundant administrative tasks and thereby reduces clinician workload and burnout [14]. However, heterogeneous EHR systems and varying levels of technical support across institutions represent significant integration challenges that must be overcome through careful planning and teamwork among vendors, IT specialists, and health administrators.

Implementation Challenges, Governance, and Ethical Frameworks

Deployment in the ED—a high-stakes, time-sensitive environment—raises critical ethical and legal concerns that must be addressed through established frameworks.

Algorithmic Bias and Fairness

One of the most pressing ethical challenges is the risk of algorithmic bias. Since AI models learn from historical operational data, they risk codifying and exacerbating existing health inequalities or structural biases present in past patient treatment records [6]. If training data disproportionately lacks information about certain demographic groups or reflects unequal treatment patterns, the resulting algorithm may provide skewed risk assessments, potentially leading to under-triage and compromised care for vulnerable populations [1]. Mitigation requires establishing formal ethical frameworks, actively monitoring the model's output for differential performance across patient groups, and ensuring training datasets are representative and carefully curated to align with principles of fairness, dignity, and equity.
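Monitoring for differential performance can start with something as simple as comparing the false negative rate across patient groups. The sketch below uses synthetic audit records; the group labels, the record layout, and the choice of the FNR gap as the summary statistic are assumptions for illustration, not a prescribed fairness standard.

```python
from collections import defaultdict


def fnr_by_group(records):
    """Compute the false negative rate per group.

    `records` is an iterable of (group, truly_critical, flagged_by_model) tuples.
    Groups with no truly critical patients are omitted from the result.
    """
    missed = defaultdict(int)
    critical = defaultdict(int)
    for group, truly_critical, flagged in records:
        if truly_critical:
            critical[group] += 1
            if not flagged:
                missed[group] += 1
    return {group: missed[group] / critical[group] for group in critical}


def fnr_gap(group_rates):
    """Largest difference in FNR between any two groups."""
    return max(group_rates.values()) - min(group_rates.values())


# Synthetic audit log, not real patient data.
audit_log = (
    [("A", True, True)] * 3 + [("A", True, False)] + [("A", False, False)]
    + [("B", True, True)] * 2 + [("B", True, False)] * 2 + [("B", False, True)]
)
group_rates = fnr_by_group(audit_log)
gap = fnr_gap(group_rates)
print(group_rates, gap)
```

In this toy log the model misses 25% of critical patients in group A and 50% in group B. A gap of that size is the kind of signal a monitoring group would investigate for under-triage of one population.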

Transparency, Explainability, and Trust

The lack of transparency regarding AI decision-making processes poses a severe barrier to clinical adoption and patient acceptance [1]. Clinicians must trust the decision support tool, particularly when AI algorithms may present "overconfident answers" or untraceable false positives, which introduce dangers to patients if relied upon without human skepticism.

Trust and acceptance are achieved through mandatory implementation of Explainable AI (XAI), ensuring that the reasoning behind a triage decision is interpretable by the clinician [6]. A consistently reliable and understandable system supports daily acceptance, which is fundamental to achieving the operational benefits identified in successful case studies [1]. Organizations must also form dedicated groups to continuously monitor AI results and ensure adherence to established ethical policies.

Regulatory and Legal Liability

The potential for sophisticated AI models, such as Large Language Models (LLMs), to generate errors, referred to as "hallucinations," or to demonstrate unreliable performance in the rare or atypical presentations that EDs regularly encounter, introduces significant liability concerns [7]. The regulatory landscape is struggling to keep pace, leaving a gap in accountability when AI-driven decisions lead to patient harm (e.g., misclassification resulting in delayed care).

To ensure patient safety, regulators like the U.S. Food and Drug Administration (FDA) monitor AI medical devices [14]. Widespread adoption necessitates clear guidelines that emphasize human oversight and adherence to a "Human-in-the-loop" design [1]. Clinicians must retain final decision-making authority, ensuring that the AI tool functions as a decision support system, rather than an autonomous decision maker, thereby providing a necessary safeguard against potentially biased or erroneous predictions.
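One way to express the human-in-the-loop requirement in software is to make the model output a suggestion that cannot become a decision without a named clinician. The following is a minimal sketch under that assumption. The class names, fields, and five-level acuity scale are hypothetical and do not describe any system discussed in this report.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class TriageSuggestion:
    """Model output: advisory only, never a final decision."""
    patient_id: str
    suggested_level: int  # hypothetical scale: 1 (most urgent) to 5
    model_confidence: float


@dataclass(frozen=True)
class TriageDecision:
    """Final decision, always attributed to a clinician."""
    patient_id: str
    final_level: int
    decided_by: str
    overrode_model: bool


def finalize(suggestion: TriageSuggestion, clinician_id: str,
             clinician_level: Optional[int] = None) -> TriageDecision:
    """Turn a suggestion into a decision; requires clinician sign-off.

    If the clinician supplies a level it always takes precedence over the model.
    """
    if not clinician_id:
        raise ValueError("A clinician must sign off every triage decision")
    final_level = suggestion.suggested_level if clinician_level is None else clinician_level
    return TriageDecision(
        patient_id=suggestion.patient_id,
        final_level=final_level,
        decided_by=clinician_id,
        overrode_model=final_level != suggestion.suggested_level,
    )


suggestion = TriageSuggestion(patient_id="p42", suggested_level=3, model_confidence=0.71)
accepted = finalize(suggestion, clinician_id="nurse_07")
overridden = finalize(suggestion, clinician_id="nurse_07", clinician_level=2)
print(accepted)
print(overridden)
```

Recording whether the clinician overrode the model also produces an audit trail that can feed the ongoing monitoring and liability review described above.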

Staff Training and Acceptance

Successful deployment requires overcoming resistance and ensuring competence among end-users. Proper training and effective teamwork among AI vendors, IT departments, and health administrators are crucial for smooth adoption and seamless integration with complex EHR systems.

Beyond standard software training, AI is transforming emergency medical education itself. AI-powered data-driven decision support systems assist trainees in real-time by suggesting differential diagnoses or prognostic information based on large datasets. Furthermore, AI is utilized within Virtual Reality (VR) simulations to create realistic environments where doctors can practice high-acuity scenarios and refine their triage skills without risk to actual patients. This educational integration ensures that future generations of emergency medicine professionals are prepared to leverage and trust these advanced decision support tools effectively.

Conclusions and Strategic Recommendations

Synthesis of Best Practices

The real-world implementation data demonstrates that AI ED triage is not a monolithic solution but rather a dual-pathway strategic intervention. The highest organizational value is currently realized through Demand Management: the preventative use of AI (e.g., NHS HIU/Health Navigator) to identify and proactively manage high-risk patients, achieving systemic benefits like a 34% reduction in A&E attendances. Concurrently, Acute Triage Optimization within the ED utilizes high-accuracy, XAI-enabled models (e.g., XGBoost, SERP) to enhance clinical outcomes, such as earlier sepsis detection, and operational efficiency, such as a 15% improvement in throughput.

The successful deployment of these systems requires moving beyond isolated performance metrics to focus on integration complexity and clinical utility. For instance, the analysis of technical implementation reveals a necessary priority shift: clinical relevance mandates optimizing models to minimize the False Negative Rate (FNR) above generalized accuracy, ensuring patient safety is the primary metric.

Policy and Governance Recommendations

Based on the observed implementation challenges, several governance actions are recommended for high-stakes acute care environments:

Adopt the Learning Health System (LHS) Framework: Traditional RCTs are often impractical for high-acuity AI tools that inherently alter clinical judgment. The LHS framework, as demonstrated in the SERP case, provides an ethically appropriate mechanism for continuous, iterative safety and efficacy evaluation during live deployment.

Mandate Explainable AI (XAI): Policy must require the integration of XAI techniques (SHAP, PDP) to ensure transparency and build necessary clinician trust, transforming "black box" outputs into auditable, actionable clinical intelligence.

Establish Clear Liability Frameworks: Regulatory bodies must develop clear guidelines addressing the liability and accountability for AI-driven decisions, especially concerning potential errors (hallucinations) in complex cases. This clarity is essential for accelerating responsible adoption across health systems.

Future Research Trajectory

To ensure the maturity and scalability of AI ED triage, future research must prioritize rigorous external validation across multi-institutional and geographically diverse datasets to confirm that predictive models generalize. Furthermore, targeted research is necessary to investigate AI performance specifically in the rare, low-frequency, or atypical presentations encountered in emergency medicine, where current models and large language models may present significant limitations or biases that disproportionately affect vulnerable patient groups.