AI Health Triage Integration: Interoperability and Governance Challenges in EHR Environments

The integration of Artificial Intelligence (AI) health triage systems into the foundational infrastructure of Electronic Health Records (EHRs) represents a critical evolution in healthcare delivery. Primary care practices are currently grappling with escalating patient demand, resource scarcity, and the ongoing difficulty of efficiently routing individuals to the appropriate level of care.1 AI triage is positioned not merely as an efficiency tool, but as a mechanism necessary for optimizing patient flow, enhancing resource utilization, and fundamentally improving patient safety across the care continuum.

The Clinical and Operational Value Proposition

AI triage systems use algorithms trained on extensive medical knowledge bases to rapidly assess a patient’s symptoms and medical history and guide them to the most suitable clinical pathway.1 Rather than relying solely on human receptionists or nurses for initial intake, these platforms add a decision-support layer to the process.1

The core functionality involves gathering detailed symptom information, often in the patient’s own words, followed by rapid data analysis.1 Based on this analysis, the system suggests an urgency level and recommends the next step, which may range from self-care advice or a telemedicine consultation to an in-person general practitioner (GP) appointment, a referral to allied services (such as pharmacists or mental health professionals), or immediate direction to an emergency department (ED).1 This proactive routing supports better resource allocation and reduces the use of high-cost services, particularly unnecessary emergency department visits.2 In addition, the continuous flow of data collected by AI triage systems yields population-level insights into patterns of patient demand, common symptom presentations, and current resource usage, which can inform practice planning and population health management strategies.1

Quantifiable Evidence of Clinical Superiority and Safety

The justification for widespread AI adoption rests on demonstrable, measurable improvements in triage accuracy and clinical safety. These systems are shifting AI from an auxiliary administrative tool to a safety-critical component of patient intake.

Recent clinical research reports a substantial increase in assessment accuracy with AI. Studies report that AI-driven triage systems achieve an accuracy rate of $75.7\%$, a reported $26.9\%$ relative improvement over the $59.8\%$ accuracy of traditional nurse-led triage.3 This improvement matters most in high-stakes environments. Integrating such systems has also been reported to lower the rate of critical patient mis-triage from $1.2\%$ to $0.9\%$, a reduction that could translate into lives saved through faster, more precise emergency care pathways.3
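As a quick arithmetic check on these figures, the relative changes implied by the quoted rates are:

$$
\frac{75.7 - 59.8}{59.8} = \frac{15.9}{59.8} \approx 0.266, \qquad \frac{1.2 - 0.9}{1.2} = \frac{0.3}{1.2} = 0.25
$$

The quoted accuracy rates therefore imply a 15.9-percentage-point gain, or roughly a $26.6\%$ relative improvement, slightly below the cited $26.9\%$; the small gap may reflect rounding in the underlying study data. The critical mis-triage rate falls by 0.3 percentage points, a $25\%$ relative reduction.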

The combination of higher accuracy and fewer critical mis-triage events sets a new efficacy benchmark for clinical triage.3 Because a technology now exists that measurably lowers the risk of patient harm by providing a more accurate assessment baseline, continued exclusive reliance on traditional human triage may expose health systems to liability for preventable mis-triage events. The case for AI triage therefore extends beyond operational efficiency: it is rapidly becoming an expectation for maintaining a high standard of care. This view is reinforced by the maturity of leading platforms such as Infermedica, which has obtained Class IIb certification under the EU Medical Device Regulation (MDR).4 Such certification indicates that the system has met regulatory requirements for clinical safety and performance, and evaluations report that its output matches or closely approximates that of human clinicians on safety benchmarks.5 The classification also implies that an AI triage module must be integrated and governed not as a simple software add-on but as a regulated, safety-critical Clinical Decision Support System (CDSS).4

Technical Architecture: EHR Integration and the Data Conduit

The clinical efficacy demonstrated by AI triage systems is directly dependent on their ability to perform seamless, real-time, bidirectional data exchange with core EHR platforms. This requirement necessitates adherence to modern interoperability standards.

The Foundational Role of FHIR in Clinical Decision Support

Fast Healthcare Interoperability Resources (FHIR) is widely recognized as the standard best suited to delivering the real-time integration speed, data structure, and semantic compatibility required between disparate health systems.6 FHIR is the most recent standard from Health Level Seven (HL7) and builds on common web technologies: RESTful APIs over HTTP, with resources serialized as JSON or XML.6 This foundation makes FHIR considerably easier to learn and implement than earlier HL7 standards such as HL7 v2 and v3, and it supports interoperability by design.6

The interoperability framework provided by FHIR is critical for integrating CDSS tools.7 It enables real-time access to patient data, allowing AI algorithms to swiftly fetch comprehensive patient context—including medical history, allergies, and current medications—directly from the EHR.6 The FHIR REST API is crucial, allowing data consumers to request specific information on demand, enabling the real-time server communication necessary to ensure the triage model’s recommendations are based on the most up-to-date patient record.6
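To make the on-demand retrieval concrete, the sketch below builds FHIR R4 search URLs for the patient context mentioned above (conditions, active medications, allergies) and flattens a returned search Bundle into short labels a triage model could consume. It is a minimal illustration, not a vendor integration: the base URL is hypothetical, network calls and OAuth tokens are omitted, and real EHRs may require additional search parameters.

```python
from urllib.parse import urlencode

# Hypothetical FHIR R4 base URL; a real integration would use the EHR's
# published endpoint and attach an OAuth 2.0 access token to each request.
FHIR_BASE = "https://ehr.example.org/fhir"


def build_context_queries(patient_id: str) -> dict[str, str]:
    """Build FHIR search URLs for the patient context a triage model needs."""
    searches = {
        "conditions": ("Condition", {"patient": patient_id}),
        "medications": ("MedicationRequest", {"patient": patient_id, "status": "active"}),
        "allergies": ("AllergyIntolerance", {"patient": patient_id}),
    }
    return {
        name: f"{FHIR_BASE}/{resource_type}?{urlencode(params)}"
        for name, (resource_type, params) in searches.items()
    }


def concept_label(concept: dict) -> str:
    """Prefer CodeableConcept.text, then the first coding's display, then its code."""
    if concept.get("text"):
        return concept["text"]
    codings = concept.get("coding") or []
    if codings:
        return codings[0].get("display") or codings[0].get("code", "unknown")
    return "unknown"


def summarize_bundle(bundle: dict) -> list[str]:
    """Flatten a FHIR searchset Bundle into 'ResourceType: label' strings."""
    labels = []
    for entry in bundle.get("entry", []):
        resource = entry.get("resource", {})
        resource_type = resource.get("resourceType", "Unknown")
        # In FHIR R4, MedicationRequest names its drug in medicationCodeableConcept;
        # Condition and AllergyIntolerance use `code`.
        key = "medicationCodeableConcept" if resource_type == "MedicationRequest" else "code"
        labels.append(f"{resource_type}: {concept_label(resource.get(key, {}))}")
    return labels


if __name__ == "__main__":
    for name, url in build_context_queries("123").items():
        print(name, url)
```

Each URL would be fetched with an HTTP GET and an `Accept: application/fhir+json` header; keeping URL construction and parsing separate from transport makes both easy to test without a live EHR.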

Technical Implementation Strategy and Workflow

Integrating a third-party AI triage system, especially with entrenched platforms like Epic or Cerner, demands a disciplined and phased technical strategy to ensure data integrity and system stability.8

The integration requires a sophisticated bidirectional communication flow. The AI module must query the EHR using specific FHIR Resources (e.g., resources for Patient, Condition, or MedicationRequest) to gather historical data that informs the initial risk assessment. Subsequently, the system must write back critical outputs—such as the calculated triage risk score, the final recommendation, and the suggested next steps—into the EHR as structured, clinician-reviewable data.1
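For the write-back half of the flow, one plausible choice (our assumption; the text does not name a resource) is the FHIR R4 `RiskAssessment` resource, which has fields for a predicted outcome, a probability, a qualitative risk, and a recommended mitigation. The sketch packages a triage result in that shape with status `preliminary` so it reads as awaiting clinician review. The urgency levels, next-step wording, and outcome label are hypothetical.

```python
import json
from datetime import datetime, timezone

# Hypothetical mapping from the model's urgency levels to a recommended next step.
NEXT_STEPS = {
    "emergency": "Direct to emergency department now",
    "urgent": "Same-day in-person GP appointment",
    "routine": "Routine GP or telemedicine appointment",
    "self_care": "Self-care advice with safety-netting",
}


def build_risk_assessment(patient_id: str, urgency: str, score: float, rationale: str) -> dict:
    """Package one triage result as a FHIR R4 RiskAssessment resource."""
    if urgency not in NEXT_STEPS:
        raise ValueError(f"unknown urgency level: {urgency}")
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be a probability between 0 and 1")
    return {
        "resourceType": "RiskAssessment",
        # 'preliminary' flags the result as awaiting clinician review.
        "status": "preliminary",
        "subject": {"reference": f"Patient/{patient_id}"},
        "occurrenceDateTime": datetime.now(timezone.utc).isoformat(),
        "prediction": [{
            "outcome": {"text": "Needs emergency care"},  # hypothetical outcome label
            "probabilityDecimal": round(score, 3),
            "qualitativeRisk": {"text": urgency},
        }],
        "mitigation": NEXT_STEPS[urgency],
        "note": [{"text": rationale}],
    }


if __name__ == "__main__":
    resource = build_risk_assessment("123", "urgent", 0.4213, "Chest pain on exertion, no red flags")
    # A real client would POST this body to {FHIR base}/RiskAssessment
    # with Content-Type: application/fhir+json.
    print(json.dumps(resource, indent=2))
```

Validating the urgency level and score before building the resource keeps malformed model output from ever reaching the clinical record.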

Deployment itself must follow a staged rollout: the integration is released in controlled phases, beginning with a small user segment before proceeding to full-scale release.8 This strategy must be underpinned by robust infrastructure, including established failover mechanisms, comprehensive disaster recovery plans to prevent data loss, and stringent version control to manage code changes, rollbacks, and continuous improvements to the AI model.8 Maintaining real-time clinical responsiveness also requires continuous tracking of performance metrics, including API latency, request success rates, and overall system throughput, because predictive models must process data in near real time to detect and flag high-risk events.
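The staged rollout and metric tracking described above can be tied together with a simple gate: record each API call's latency and outcome over a monitoring window, then advance to the next rollout phase only if the window met the service thresholds. The phase sizes, the 99.5% success-rate floor, and the 800 ms p95 latency budget below are illustrative assumptions, not recommended values.

```python
import math
from dataclasses import dataclass, field


@dataclass
class IntegrationMetrics:
    """Record of AI-module API calls observed during one monitoring window."""
    latencies_ms: list[float] = field(default_factory=list)
    successes: int = 0
    failures: int = 0

    def record(self, latency_ms: float, ok: bool) -> None:
        self.latencies_ms.append(latency_ms)
        if ok:
            self.successes += 1
        else:
            self.failures += 1

    def success_rate(self) -> float:
        total = self.successes + self.failures
        return self.successes / total if total else 0.0

    def p95_latency_ms(self) -> float:
        # Nearest-rank percentile: smallest observed latency covering 95% of calls.
        if not self.latencies_ms:
            return 0.0
        ordered = sorted(self.latencies_ms)
        return ordered[math.ceil(0.95 * len(ordered)) - 1]

    def throughput_per_s(self, window_s: float) -> float:
        if window_s <= 0:
            raise ValueError("window must be positive")
        return (self.successes + self.failures) / window_s


# Hypothetical rollout phases (share of users) and service thresholds.
PHASES = [0.05, 0.25, 1.0]
MIN_SUCCESS_RATE = 0.995
MAX_P95_MS = 800.0


def next_phase(current: float, metrics: IntegrationMetrics) -> float:
    """Advance one phase if the window was healthy; otherwise fall back to the smallest cohort."""
    healthy = (metrics.success_rate() >= MIN_SUCCESS_RATE
               and metrics.p95_latency_ms() <= MAX_P95_MS)
    if not healthy:
        return PHASES[0]
    index = PHASES.index(current)
    return PHASES[min(index + 1, len(PHASES) - 1)]
```

Falling back to the smallest cohort on any unhealthy window is a conservative design choice; an organization might instead hold at the current phase and page on-call staff. An empty window counts as unhealthy, so a silent integration never advances.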

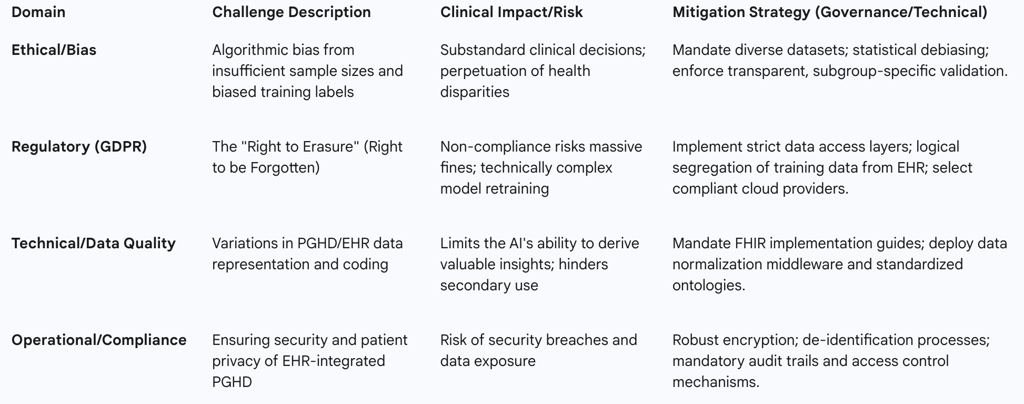

Interoperability Gaps: PGHD and Variational Coding

While FHIR provides robust standardization for structured clinical data (e.g., diagnosis codes, lab results), significant technical challenges persist in accommodating non-traditional data sources and ensuring semantic uniformity across systems.9

A critical gap is presented by Person-Generated Health Data (PGHD). This includes self-reported symptoms input directly into the AI triage system, as well as data gathered from wearable devices.1 The lack of standardized terminology and format for PGHD limits the completeness and normalization of the integrated data.9 This data heterogeneity and variational coding constrain the AI system's ability to draw comprehensive, valuable clinical insights.

The constraint imposed by non-standardized PGHD creates a measurable data quality bottleneck. Since AI triage depends heavily on patient input—often captured as unstructured text detailing symptoms—the system’s overall predictive accuracy may be compromised in real-world settings compared to validation studies based purely on highly structured EHR records.1 Therefore, the health system must account for a separate technical requirement: developing a normalization layer to process, standardize, and code PGHD into reliable FHIR resources before it reaches the AI model. Furthermore, the reliance on high-volume, low-latency data exchange via FHIR RESTful APIs mandates a strategic investment in robust, commercial-grade API management and middleware solutions.6 This infrastructure is vital not just for the AI model itself, but for securing, scaling, and monitoring the millions of daily API calls between the EHR backbone and the integrated AI module.
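A normalization layer of the kind described above might, at its simplest, map patient-entered symptom phrases to coded concepts, emit them as FHIR `Observation` resources, and route anything it cannot map to a human coder instead of guessing. The sketch uses exact matching against a small hypothetical synonym table; production systems would more likely rely on a terminology service or clinical NLP. The SNOMED CT codes are ones we believe correspond to these findings, but they are illustrative and should be verified against a licensed SNOMED CT release.

```python
import re

SNOMED = "http://snomed.info/sct"
# Illustrative SNOMED CT concepts; verify codes against a licensed release.
CONCEPTS = {
    "fever": ("386661006", "Fever"),
    "headache": ("25064002", "Headache"),
    "chest pain": ("29857009", "Chest pain"),
}
# Hypothetical synonym table mapping patient phrasing to canonical concepts.
SYNONYMS = {
    "high temperature": "fever",
    "feverish": "fever",
    "head hurts": "headache",
    "tight chest": "chest pain",
    "chest hurts": "chest pain",
}


def normalize_phrase(text: str) -> str:
    """Lowercase, replace non-letters with spaces, and collapse whitespace."""
    letters_only = re.sub(r"[^a-z]+", " ", text.lower())
    return " ".join(letters_only.split())


def to_observations(patient_id: str, entries: list[str]) -> tuple[list[dict], list[str]]:
    """Return (coded Observations, raw entries that need human review)."""
    observations, needs_review = [], []
    for raw in entries:
        phrase = normalize_phrase(raw)
        key = phrase if phrase in CONCEPTS else SYNONYMS.get(phrase)
        if key is None:
            needs_review.append(raw)  # never guess a code for unmapped text
            continue
        code, display = CONCEPTS[key]
        observations.append({
            "resourceType": "Observation",
            "status": "preliminary",  # patient-reported, not yet clinician-confirmed
            "code": {
                "coding": [{"system": SNOMED, "code": code, "display": display}],
                "text": raw,  # keep the patient's original wording
            },
            "subject": {"reference": f"Patient/{patient_id}"},
        })
    return observations, needs_review
```

Keeping the patient's original wording alongside the code preserves nuance for clinicians, while the review queue makes normalization gaps visible rather than silently dropping data.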

Navigating the EHR Vendor Landscape

Interoperability in the clinical environment is heavily influenced by the proprietary structures and strategic data exchange philosophies of the dominant EHR vendors. Integration of AI triage solutions must navigate these established ecosystems.

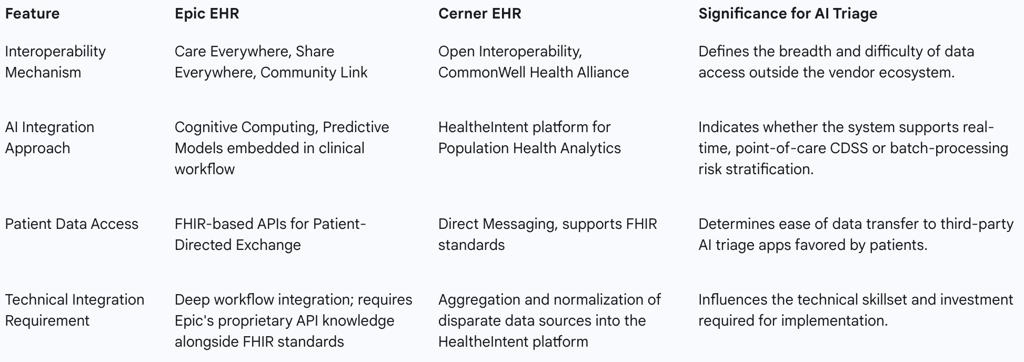

Epic's Integrated Strategy and FHIR Adoption

Epic, a prevalent EHR platform in large U.S. health systems, promotes a unified and highly interconnected approach to patient record management. Epic facilitates extensive data sharing across organizations via Care Everywhere, which handles the exchange of over 20 million patient records daily, with roughly half of these exchanges occurring with organizations using different interoperable EHRs.10

For AI integration, Epic offers Epic Cognitive Computing, which focuses on embedding predictive models directly into existing clinical workflows.11 Tools such as SlicerDicer are used for real-time analytics that support operational and clinical insights.11 Importantly, Epic supports patient autonomy through Patient-Directed Exchange, which uses FHIR-based APIs to let patients securely transmit their electronic health information to third-party applications of their choosing.10 This capability gives patient-chosen external AI triage applications a standards-based route to the patient’s record.
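Patient-facing FHIR APIs are commonly protected with OAuth 2.0 following the SMART App Launch specification. Assuming the EHR exposes a SMART-conformant authorization endpoint (we do not claim this is exactly how Epic's Patient-Directed Exchange is implemented), a third-party triage app would start a standalone launch roughly as sketched below, using PKCE (RFC 7636) to protect the authorization code. The endpoint URLs, client ID, and redirect URI are hypothetical.

```python
import base64
import hashlib
import secrets
from urllib.parse import urlencode

# Hypothetical values; a real app registers with the EHR and discovers the
# authorize endpoint from {FHIR base}/.well-known/smart-configuration.
AUTHORIZE_URL = "https://ehr.example.org/oauth2/authorize"
FHIR_BASE = "https://ehr.example.org/fhir"
CLIENT_ID = "example-triage-app"
REDIRECT_URI = "https://triage.example.org/callback"


def make_pkce_pair() -> tuple[str, str]:
    """Return (code_verifier, S256 code_challenge) as defined by RFC 7636."""
    verifier = secrets.token_urlsafe(64)  # about 86 URL-safe characters
    digest = hashlib.sha256(verifier.encode("ascii")).digest()
    challenge = base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")
    return verifier, challenge


def build_authorize_url(scopes: list[str]) -> tuple[str, str, str]:
    """Build a SMART standalone-launch authorization URL.

    Returns (url, state, code_verifier); the app keeps state and verifier
    to validate the redirect and later redeem the authorization code.
    """
    verifier, challenge = make_pkce_pair()
    state = secrets.token_urlsafe(16)
    params = {
        "response_type": "code",
        "client_id": CLIENT_ID,
        "redirect_uri": REDIRECT_URI,
        "scope": " ".join(scopes),
        "state": state,
        "aud": FHIR_BASE,  # the FHIR server the token is intended for
        "code_challenge": challenge,
        "code_challenge_method": "S256",
    }
    return f"{AUTHORIZE_URL}?{urlencode(params)}", state, verifier


if __name__ == "__main__":
    url, _, _ = build_authorize_url(["launch/patient", "openid", "fhirUser", "patient/Condition.rs"])
    print(url)
```

After the patient approves access, the app exchanges the returned code plus the stored `code_verifier` at the token endpoint for an access token scoped to that patient's data.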

Cerner's Open Platform and Population Health Focus

Cerner (now Oracle Health) has historically emphasized a strategy built on open interoperability and cross-platform data exchange, often focusing on broad data aggregation for population health initiatives.11

Cerner supports broad interoperability through participation in initiatives such as the CommonWell Health Alliance and utilizes Direct Messaging for secure communications between healthcare providers.11 The company centers its AI approach on population health and predictive analytics, employing the HealtheIntent platform to gather and synthesize data from various disparate sources. This comprehensive data aggregation supports proactive management of chronic diseases and aids public health efforts.11

Strategic Integration Models

The differences in vendor strategies dictate distinct AI adoption pathways. A successful AI integration strategy must recognize whether the goal is immediate, point-of-care clinical decision support (CDSS), which aligns more closely with Epic’s integrated workflow approach, or long-term population health risk stratification, which aligns more closely with Cerner’s platform approach.11

Integrating AI with either vendor requires specialized techniques, including advanced APIs, HL7 and FHIR standards, RESTful protocols, and middleware.8 Together these elements form a resilient conduit for the continuous flow of medical information between the AI module and the core EHR data sets.8 The primary integration objective must remain improving clinical workflow efficiency, which includes reducing administrative errors and accelerating precision-driven analytics, such as AI capabilities that rapidly analyze large datasets to detect risks like sepsis.8

While FHIR sets the necessary data exchange standards, the continued reliance on proprietary mechanisms such as Care Everywhere suggests that seamless AI integration often requires a hybrid model. Such a model leverages both standard FHIR endpoints and specialized, vendor-specific APIs and protocols, which increases complexity and maintenance overhead for internal IT teams.

Table 2: Comparison of Major EHR Vendor Interoperability for AI Integration

Interoperability Challenge I: Addressing Data Integrity and Algorithmic Bias

The single greatest ethical and clinical risk associated with integrating AI triage into EHRs is the unintentional codification and perpetuation of health disparities through algorithmic bias. Left unchecked, biased medical AI can lead to substandard clinical decisions and exacerbate long-standing systemic issues.12

Sources and Propagation of Bias

Algorithmic bias is a systemic problem that compounds across the entire AI development and deployment lifecycle.12 It originates primarily in the training data, often because sample sizes for specific patient groups are too small, which leads to suboptimal performance and clinically unreliable predictions for those subgroups.

A significant concern is the quality of the labels used to train supervised learning models. These labels, often derived from historical patient records, may inadvertently reflect existing implicit cognitive biases or even codify substandard historical care practices.12 For example, if a health system historically provided less aggressive triage or referral rates to a particular demographic, the AI will learn that lower rate as the "correct" outcome, thereby automating and scaling biased resource allocation.

Compounding this issue is the presence of missing data. Data features that are not routinely captured, such as Social Determinants of Health (SDOH), or data that is non-randomly missing, such as diagnosis codes for specific populations, introduce bias because the AI lacks key factors influencing real-world health outcomes.12 When applied clinically, biased AI can perpetuate and exacerbate serious healthcare disparities, failing to account for outcome differences such as the higher overall mortality rate observed in non-Hispanic Black patients compared to non-Hispanic white patients.

The Deterioration of Model Performance Post-Deployment

AI models validated in closed development environments frequently exhibit performance degradation when exposed to the variability and complexity of real-world clinical data. Critically, this degradation is rarely uniform.12

When models are deployed to patient populations outside their original training cohort, performance can deteriorate, and this failure can occur differentially across subgroups.12 This differential deterioration results in higher mis-triage rates for specific racial or ethnic minorities, undermining the model’s overall clinical utility. Reliance solely on aggregate performance metrics (e.g., the reported $75.7\%$ overall accuracy) may obscure this subgroup-specific bias, creating a false sense of security regarding the model’s safety profile.12 End-user interaction with deployed solutions can also introduce bias, further compromising outcomes.12
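The way an aggregate metric can hide subgroup failure is easy to demonstrate. The sketch computes overall and per-subgroup accuracy and flags any subgroup trailing the best-performing one by more than a tolerance. The records are synthetic and purely illustrative (they are not drawn from any study), and the 0.05 tolerance is an assumed policy value.

```python
from collections import defaultdict


def accuracy_by_group(records: list[tuple[str, str, str]]) -> tuple[float, dict[str, float]]:
    """records are (subgroup, predicted_level, reference_level) triples.

    Returns overall accuracy and accuracy within each subgroup.
    """
    hits: dict[str, int] = defaultdict(int)
    totals: dict[str, int] = defaultdict(int)
    for group, predicted, reference in records:
        totals[group] += 1
        hits[group] += int(predicted == reference)
    overall = sum(hits.values()) / sum(totals.values())
    return overall, {group: hits[group] / totals[group] for group in totals}


def flag_gaps(per_group: dict[str, float], tolerance: float) -> list[str]:
    """Return subgroups whose accuracy trails the best subgroup by more than `tolerance`."""
    best = max(per_group.values())
    return sorted(g for g, acc in per_group.items() if best - acc > tolerance)


if __name__ == "__main__":
    # Synthetic data: a large subgroup A and a small, poorly served subgroup B.
    # Every error here is under-triage ("routine" when "urgent" was correct).
    records = (
        [("A", "urgent", "urgent")] * 81 + [("A", "routine", "urgent")] * 9
        + [("B", "urgent", "urgent")] * 5 + [("B", "routine", "urgent")] * 5
    )
    overall, per_group = accuracy_by_group(records)
    print(f"overall accuracy: {overall:.2f}")  # 0.86 overall...
    print(per_group)                            # ...but subgroup B is at 0.5
    print("flagged:", flag_gaps(per_group, tolerance=0.05))
```

Because subgroup B contributes only 10 of 100 records, its 50% accuracy barely moves the overall figure, which is exactly the false sense of security the text warns about.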

Mitigation Frameworks and Governance

Mitigating algorithmic bias requires a robust, proactive governance framework implemented across the entire model lifecycle. First and foremost, mitigation requires a data diversity mandate, ensuring the collection of large, diverse, and representative datasets, and actively ensuring that underrepresented patient groups are appropriately sampled.

Health systems must also emphasize Explainable AI (XAI).1 XAI provides transparency that builds trust among patients and clinicians, and it lets auditors and clinical staff see which factors drove a triage decision, making it possible to identify and correct biased pathways.12 This transparency requirement must be paired with thorough model evaluation, including subgroup-specific analyses, with standardized bias reporting and transparency requirements enforced before any real-world clinical implementation.12 Taken together, this approach extends clinical informatics into clinical ethics and public health, so AI governance bodies should include specialists in these fields, alongside technical developers, to scrutinize the socio-technical implications of every triage recommendation.
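For a linear model such as logistic regression, one simple form of explanation decomposes a patient's score into per-feature contributions relative to a population baseline: each contribution is the feature's weight times the patient's deviation from the baseline value, and together they sum exactly to the difference between the patient's logit and the baseline logit. The weights, intercept, and baseline values below are hypothetical and not fitted to any data. Note that such attributions show what the model relied on, which is not the same as clinical causation.

```python
import math

# Hypothetical logistic triage model; values are illustrative, not fitted.
WEIGHTS = {"age_over_65": 0.8, "chest_pain": 1.6, "heart_rate_gt_110": 1.1, "symptom_days": -0.05}
BASELINE = {"age_over_65": 0.2, "chest_pain": 0.1, "heart_rate_gt_110": 0.05, "symptom_days": 3.0}
INTERCEPT = -3.0


def logit(features: dict[str, float]) -> float:
    return INTERCEPT + sum(WEIGHTS[f] * features[f] for f in WEIGHTS)


def explain(patient: dict[str, float]) -> tuple[float, list[tuple[str, float]]]:
    """Return the predicted probability and per-feature logit contributions, largest first."""
    probability = 1.0 / (1.0 + math.exp(-logit(patient)))
    contributions = [(f, WEIGHTS[f] * (patient[f] - BASELINE[f])) for f in WEIGHTS]
    contributions.sort(key=lambda item: abs(item[1]), reverse=True)
    return probability, contributions


if __name__ == "__main__":
    patient = {"age_over_65": 1, "chest_pain": 1, "heart_rate_gt_110": 0, "symptom_days": 1}
    probability, contributions = explain(patient)
    print(f"probability: {probability:.3f}")
    for feature, value in contributions:
        print(f"{feature:>18}: {value:+.3f}")
```

Non-linear models need approximate attribution methods, but the auditing question is the same: does a protected attribute, or a proxy for it, carry unexpected weight?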

Interoperability Challenge II: Regulatory Compliance and Cross-Jurisdictional Risks

The integration of AI, particularly conversational AI agents and Large Language Models (LLMs) often hosted in cloud environments, introduces acute legal and regulatory friction when operating across international boundaries, requiring careful navigation of HIPAA and GDPR compliance mandates.

The HIPAA Framework (U.S.)

The Health Insurance Portability and Accountability Act (HIPAA) establishes the foundational requirements for the security and privacy of Protected Health Information (PHI) in the United States. HIPAA focuses heavily on ensuring accountability and strict access control.

Under HIPAA, organizations must maintain robust mechanisms to control and identify authorized access to medical records, including unique user IDs, secure passwords, and rigorous audit trails that track every user activity, such as data access or modification.14 This emphasis on record permanence and accountability is vital for continuity of care and legal compliance. Consequently, HIPAA, unlike the EU’s GDPR, does not grant patients a full “right to be forgotten”.15 Patients may request access to their PHI and request amendments to it, but they cannot demand its complete erasure.
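HIPAA's Security Rule requires audit controls but does not prescribe how to build them. One common technique for making an audit trail tamper-evident is hash chaining, sketched below: each entry stores the SHA-256 hash of its own contents plus the previous entry's hash, so editing any past entry breaks verification from that point on. The field names are assumptions for illustration.

```python
import hashlib
import json
from datetime import datetime, timezone


class AuditLog:
    """Append-only audit trail in which each entry commits to its predecessor's hash."""

    GENESIS = "0" * 64

    def __init__(self) -> None:
        self.entries: list[dict] = []

    @staticmethod
    def _digest(entry: dict) -> str:
        # Hash a canonical JSON form of every field except the stored hash itself.
        payload = {k: v for k, v in entry.items() if k != "hash"}
        canonical = json.dumps(payload, sort_keys=True, separators=(",", ":"))
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

    def append(self, user_id: str, action: str, record_id: str) -> dict:
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "user_id": user_id,
            "action": action,  # e.g. "read", "update"
            "record_id": record_id,
            "prev_hash": self.entries[-1]["hash"] if self.entries else self.GENESIS,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash and check each link to the previous entry."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash or entry["hash"] != self._digest(entry):
                return False
            prev_hash = entry["hash"]
        return True
```

A chain alone cannot stop someone who rewrites every entry; in practice the latest hash would also be anchored in a separate, access-restricted system so a rewritten chain can be detected.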

The GDPR Framework (E.U.): The Right to Erasure Conflict

The European Union's General Data Protection Regulation (GDPR) imposes significantly stricter constraints on data handling, particularly concerning patient autonomy and the control of personal data.16

GDPR grants individuals in the EU extensive control over their personal data, including health data, through rights such as the Right of Access, the Right to Rectification, and, critically, the Right to Erasure, commonly known as the "right to be forgotten".17 Although Article 17(3) provides exceptions, including for certain public-health and healthcare purposes, this right is technically formidable for AI systems, particularly those trained on vast datasets. Personal data can be deeply embedded within model weights, training datasets, or cached responses, making complete deletion difficult or, in some cases, impossible without costly and complex model retraining.16 To comply, organizations handling EU patients' data must, upon a valid request, delete the relevant personal data both from raw databases and from every secondary location the AI uses, which in turn requires continuous tracking of each individual's consent and erasure status.16 Missing GDPR's response deadlines (generally one month under Article 12(3)) exposes organizations to substantial fines, which for infringements of data subjects' rights can reach €20 million or 4% of worldwide annual turnover, whichever is higher.

The fundamental tension between HIPAA's emphasis on record integrity and accountability and GDPR's demand for data erasure calls for a disciplined architectural solution: data segregation. Health systems operating across jurisdictions should not use a unified data store for both AI training and auditable clinical records. Raw AI training data, which must remain mutable to satisfy GDPR erasure requests, must be logically and physically separated from the durable, auditable EHR record required by HIPAA and clinical necessity. This in turn requires strict data access layers that limit visibility of sensitive details while still allowing checks on data availability.
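The segregation principle can be sketched as two separate stores with an erasure handler that acts only on the training store. This is a deliberately simplified, hypothetical model: a boolean `training_consent` stands in for whatever lawful basis applies, and whether the clinical copy may also be erased is left to a legal determination (for example under the GDPR Article 17(3) exemptions) rather than automated.

```python
from dataclasses import dataclass, field


@dataclass
class SegregatedStores:
    """Hypothetical split between the auditable clinical record and an erasable AI training store."""
    clinical_record: dict[str, list[str]] = field(default_factory=dict)  # retained for care and audit
    training_store: dict[str, list[str]] = field(default_factory=dict)   # erasable on request
    erasure_log: list[str] = field(default_factory=list)

    def ingest(self, patient_id: str, note: str, training_consent: bool) -> None:
        """Always write the clinical record; copy to the training store only with consent."""
        self.clinical_record.setdefault(patient_id, []).append(note)
        if training_consent:
            self.training_store.setdefault(patient_id, []).append(note)

    def handle_erasure_request(self, patient_id: str) -> int:
        """Erase the patient's training data and return how many items were removed.

        The clinical record is untouched: erasing it is a legal decision, not
        an automatic step.
        """
        removed = len(self.training_store.pop(patient_id, []))
        self.erasure_log.append(f"training data erased for {patient_id}: {removed} item(s)")
        return removed
```

Deleting the stored records does not remove what a model has already learned from them; as noted above, that may still require retraining or another removal technique, so erasure requests should also trigger a review of any models trained on the affected data.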

Cloud Deployment and Data Residency Risk

The operational trend toward utilizing cloud-based LLMs for advanced conversational triage introduces complex compliance layers. Standard cloud LLM providers often fail to meet the stringent data residency or confidentiality requirements demanded by healthcare regulations.16 Healthcare organizations operating with cross-jurisdictional compliance mandates must select specialized cloud providers that offer region-specific data centers and necessary compliance certifications, though these options may be higher-cost and offer fewer features than global services.

Moreover, the integration of non-standardized PGHD increases the overall security risk. Without proper standardization and robust security protocols for this data, EHR-integrated PGHD is highly vulnerable to security breaches that could compromise data integrity and expose PHI.9 To operationally enforce GDPR's requirements in cloud-based AI, organizations must move towards Privacy-Preserving AI (PPAI) techniques, such as federated learning or differential privacy, to train models without retaining direct links to identifiable data, thereby minimizing the exposure of PII embedded within the model itself.
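As a small, concrete instance of one PPAI technique, the sketch applies the Laplace mechanism of differential privacy to a released count, such as the number of patients triaged for a given symptom. Adding or removing one patient changes a count by at most 1, so the noise scale is $1/\varepsilon$. This illustrates differential privacy for aggregate statistics only; training a model with differential privacy (for example DP-SGD, which clips per-example gradients and adds noise) and federated learning are more involved and are not shown.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two i.i.d. exponentials with mean `scale`."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(true_count: int, epsilon: float, rng: random.Random,
                  sensitivity: float = 1.0) -> float:
    """Release a count with epsilon-differential privacy via the Laplace mechanism."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    return true_count + laplace_noise(sensitivity / epsilon, rng)


if __name__ == "__main__":
    rng = random.Random()
    # Smaller epsilon means stronger privacy and noisier releases.
    for epsilon in (0.1, 1.0):
        print(epsilon, round(private_count(120, epsilon, rng), 1))
```

Rounding or clamping the noisy value to a non-negative integer afterwards does not weaken the guarantee, because differential privacy is preserved under post-processing; repeated releases, however, consume privacy budget.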

Table 3: Critical Interoperability Challenges and Mitigation Strategies

Governing AI: A Unified Framework for Responsible Deployment

The successful, safe, and ethical integration of AI health triage systems demands the implementation of robust, proactive, and centralized AI governance. In the absence of comprehensive external regulation for medical AI, healthcare organizations must implement internal systems of oversight.

The Mandate for Unified AI Governance

AI governance is defined as a system of rules, practices, processes, and technological tools designed to ensure that AI solutions are transparent, equitable, and aligned with core ethical and regulatory standards. This structure serves to mitigate risks, safeguard patient safety, and support the responsible integration of AI into clinical practice. Industry leaders and clinical stakeholders express a strong, unified desire for a centralized approach to AI governance, ensuring consistent application of policy and risk management across all diverse AI initiatives within the organization. This centralization acts as the necessary counterbalance to the technical complexity of integrating various AI vendors and ensures a single point of authority to enforce standards (FHIR protocols, bias audits, and compliance) before the AI interacts with the clinical workflow.
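One way a centralized governance body could act as that single point of authority is a pre-deployment gate: a model submission carries its evidence, and any failed check blocks go-live. The fields and the 0.05 subgroup-gap threshold below are hypothetical policy choices for illustration, not a standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelSubmission:
    """Evidence a hypothetical governance board reviews before go-live."""
    name: str
    fhir_conformance_passed: bool
    max_subgroup_accuracy_gap: float
    privacy_review_signed_off: bool
    clinical_safety_case_approved: bool


# Illustrative threshold, set by the governance body rather than by developers.
MAX_ALLOWED_GAP = 0.05


def review(sub: ModelSubmission) -> list[str]:
    """Return blocking findings; an empty list clears the model for a staged rollout."""
    findings = []
    if not sub.fhir_conformance_passed:
        findings.append("FHIR interface conformance tests not passed")
    if sub.max_subgroup_accuracy_gap > MAX_ALLOWED_GAP:
        findings.append(
            f"subgroup accuracy gap {sub.max_subgroup_accuracy_gap:.2f} exceeds {MAX_ALLOWED_GAP:.2f}"
        )
    if not sub.privacy_review_signed_off:
        findings.append("HIPAA/GDPR privacy review missing")
    if not sub.clinical_safety_case_approved:
        findings.append("clinical safety case not approved")
    return findings
```

Returning every finding, rather than stopping at the first, gives vendors and internal teams a complete remediation list in one review cycle.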

Pillars of Governance: Transparency, Equity, and Accountability

Effective governance must be structured around core pillars that uphold patient safety and ethical practice. A key requirement is establishing stringent audit trails and accountability mechanisms. This includes ensuring that only authorized personnel can access and assess audit trails, which must track every system decision and user activity (who accessed or modified a record), providing the necessary legal and clinical accountability.

Transparency must be enforced by mandating the use of Explainable AI models (XAI) wherever clinically feasible. Furthermore, governance bodies must address health equity proactively by mandating standardized data requirements. This includes the implementation of a universal patient identifier, which is crucial for improving the representation of underserved patients—who are typically underrepresented in health information systems (HIS) and often switch providers—thereby enhancing the system's ability to address health inequities.

A critical area where governance must intervene is data quality, particularly the completeness of outcome data. Current systems often fail to capture fundamental data such as standardized mortality tracking, because deaths are frequently not recorded in the medical record unless they occur during hospitalization. An AI model trained on data lacking standardized, definitive outcomes such as death has a fundamentally limited ability to stratify the highest-risk conditions accurately and safely, which caps how far it can reduce mis-triage rates. Governance must therefore enforce improved outcome data collection standards across the EHR.

Operationalizing Risk Mitigation

AI governance must translate into specific, non-negotiable operational protocols for managing data flow and vendor relationships. IT teams must establish clear, secure processes for extracting data from the EHR, sharing it with vendors to facilitate model adaptation and evaluation under appropriate contractual agreements, and securely reintegrating the results back into the EHR system.

This operational framework requires rigorous oversight of data de-identification and subsequent re-identification processes. These safeguards are essential to ensure that vendors can securely access the necessary data for model training and validation while maintaining patient confidentiality and mitigating risk. The adherence to these operational mandates ensures that the technical promise of AI triage is delivered responsibly and equitably, aligned with both clinical necessity and regulatory requirements.
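The de-identification and re-identification safeguards described above can be sketched with keyed pseudonymization: direct identifiers are dropped before export, the record number is replaced by an HMAC-SHA256 token, and only the health system, which holds the secret key and lookup table, can map vendor results back to the patient. The identifier list is a hypothetical subset (HIPAA's Safe Harbor method covers 18 identifier types), and pseudonymized data generally remains personal data under GDPR, so contractual and access controls still apply.

```python
import hashlib
import hmac
import secrets
from typing import Optional

# Hypothetical subset of direct identifiers removed before export.
DIRECT_IDENTIFIERS = {"name", "mrn", "phone", "email", "address"}


class Pseudonymizer:
    """Keyed pseudonyms for vendor exports; key and lookup never leave the health system."""

    def __init__(self, key: Optional[bytes] = None) -> None:
        self._key = key or secrets.token_bytes(32)
        self._reverse: dict[str, str] = {}

    def pseudonym(self, mrn: str) -> str:
        """Deterministic token: the same MRN and key always yield the same pseudonym."""
        token = hmac.new(self._key, mrn.encode("utf-8"), hashlib.sha256).hexdigest()
        self._reverse[token] = mrn
        return token

    def export(self, record: dict) -> dict:
        """Strip direct identifiers and attach the pseudonym for vendor use."""
        out = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
        out["pseudo_id"] = self.pseudonym(record["mrn"])
        return out

    def reidentify(self, pseudo_id: str) -> str:
        """Authorized lookup used when reintegrating vendor results into the EHR."""
        return self._reverse[pseudo_id]
```

Quasi-identifiers that survive export, such as exact dates or small geographic areas, can still enable re-identification, so they would need generalization or review before data leaves the organization.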