Agentic AI in Triage: Are We Ready for Autonomous Prioritization in 2025?

Agentic Artificial Intelligence (AI) systems represent a fundamental functional progression beyond the current generation of integrated AI in healthcare, shifting from passive clinical decision support (CDS) toward dynamic, coordinated, and autonomous action. This technological leap holds profound promise for complex logistical challenges such as revenue cycle management, care pathway optimization, and population health management, where the system can autonomously coordinate outreach and monitor outcomes across large patient cohorts.

However, when evaluating readiness to deploy Agentic AI systems capable of fully autonomous patient prioritization (triage) within the immediate operational timeline of 2025, the overall verdict is highly cautious. While the technical frameworks for building such autonomous systems exist, the readiness level for safe, ethical, and legally compliant deployment in acute, life-critical environments like the Emergency Department (ED) is critically low. Fully autonomous clinical application, particularly in high-risk areas like triage, remains largely experimental and lacks the trust and regulatory backing necessary for routine use.

The central contradiction in the 2025 landscape is that Agentic AI is technically capable of reasoning across domains and executing complex, multi-step interventions. Yet, the legal and ethical infrastructure required to manage the consequences of system failure in an autonomous triage setting is nascent and unprepared. This unpreparedness creates an immediate legal and financial impasse for adoption, as the allocation of liability for misprioritization resulting in patient harm is fundamentally unresolved.

Therefore, the strategic stance for health systems should be highly conservative. Organizations are strongly advised to focus resources through 2027 on integrated augmentation models—hybrid approaches where Agentic frameworks enhance coordination and prediction under strict human supervision. This period must be used to rigorously develop the comprehensive governance structures, establish real-time algorithmovigilance programs, and advocate for clear legal frameworks necessary before attempting deployment of Level 5 autonomy in acute patient prioritization.

Agentic AI in Healthcare: A Fundamental Paradigm Shift

To accurately assess readiness, it is imperative to establish a precise technical and functional distinction between the AI systems currently succeeding in healthcare and the nascent concept of Agentic AI.

Defining Autonomy in Clinical Contexts

Traditional AI applications, which constitute the majority of current success stories, are characterized by their focus on specific, high-impact workflow improvements. Examples include specialized deep learning networks that reduce radiologist reading time, or CDS tools that flag critical conditions in the EHR. These systems, like the Mednition KATE™ model utilized in some health systems, function by analyzing symptoms against historical records to immediately provide information about the appropriate level of care, thereby assisting care teams. Crucially, in these traditional, integrated systems, the human caregiver remains the ultimate decision maker.

Agentic AI, conversely, represents a fundamentally different operational approach. These systems are designed with the capability for self-directed action, reasoning across multiple healthcare domains, planning multi-step interventions, and executing coordinated responses autonomously. The system shifts its role from providing a passive prediction to actively managing an entire process. For instance, in population health, an Agentic system would not just identify at-risk patients; it would autonomously coordinate outreach, schedule interventions, and monitor outcomes across the entire population, adapting its strategy based on real-time feedback.

Currently, fully autonomous AI capable of clinical decision-making and execution remains largely experimental. While elements of agentic capability, such as real-time adaptability, are gradually being introduced under human supervision in areas like robotic-assisted surgery, integrated AI that supports human expertise is still the prevailing standard. However, the demonstrated statistical superiority of these models, such as KATE™ achieving a 75.7% accuracy rate in predicting ESI acuity assignments compared to an average triage nurse's 59.8%, confirms the potential clinical value in leveraging these advanced predictive capabilities.

Architectural Blueprint of Agentic Triage Systems

The complexity of acute triage—rapidly assessing life-threatening conditions, allocating scarce resources, and coordinating care—demands a sophisticated architecture that far exceeds standard predictive models. Agentic triage systems must operate through continuous, self-monitoring execution loops, often powered by Large Language Models (LLMs) that integrate and synthesize multimodal patient data.

Components of the Agentic Loop

The functionality of an autonomous agent is cyclical and iterative. This loop includes:

Perception: The agent begins by collecting data from the chaotic environment of the ED, utilizing sensors, APIs, databases, and dynamic interactions with the Electronic Health Record (EHR). For an Agentic triage system, this involves ingesting a complex mix of unstructured (symptom descriptions) and structured data (vitals, lab results).

Reasoning and Data Processing: Collected data is processed to extract meaningful insights, filter out ambient noise inherent in clinical settings, and interpret the data within the immediate clinical context.

Goal Setting and Plan Generation: The system sets objectives (e.g., maintain patient safety, optimize bed occupancy, minimize wait times) and generates executable plans—sequences of actions—to achieve these goals, factoring in existing resource constraints.

Decision-Making and Execution: The AI evaluates potential actions and chooses the optimal strategy based on factors like predicted outcomes, efficiency, and accuracy. For autonomous triage, execution means actively assigning the Emergency Severity Index (ESI) level, ordering initial diagnostics, and directing the patient to a specific physical location or resource.

Learning and Adaptation: The agent continuously refines its performance based on outcomes and environmental feedback.

Orchestration: Crucial for complex environments, orchestration is the mechanism that manages multiple specialized AI agents working together as a "crew". This complexity is often managed by specialized agentic frameworks, such as CrewAI, Agno, or LangGraph, which provide defined parameters and protocols for agent communication, conflict avoidance, and scale.

The fundamental difference between integrated AI and Agentic AI deployment in triage is that the latter requires the system to reliably execute this entire loop—from perception through execution—in a safety-critical environment without immediate human intervention.
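The loop described above can be made concrete with a minimal sketch. Everything here is hypothetical: the field names, the thresholds, and the toy prioritization rule are illustrative assumptions only, not the ESI algorithm and not the design of any named framework. The learning and orchestration stages are deliberately left out to keep the example short.

```python
from dataclasses import dataclass, field


@dataclass
class PatientObservation:
    chief_complaint: str  # unstructured symptom description
    heart_rate: int       # structured vitals
    spo2: int


@dataclass
class TriagePlan:
    esi_level: int
    actions: list[str] = field(default_factory=list)


def perceive(raw: dict) -> PatientObservation:
    """Perception: normalize raw intake data into a typed observation."""
    return PatientObservation(
        chief_complaint=str(raw["chief_complaint"]),
        heart_rate=int(raw["heart_rate"]),
        spo2=int(raw["spo2"]),
    )


def reason(obs: PatientObservation) -> dict:
    """Reasoning: derive findings from the observation (toy thresholds)."""
    return {
        "hypoxic": obs.spo2 < 90,
        "tachycardic": obs.heart_rate > 120,
        "chest_pain": "chest pain" in obs.chief_complaint.lower(),
    }


def plan(findings: dict) -> TriagePlan:
    """Plan generation: illustrative rule only, NOT the real ESI algorithm."""
    if findings["hypoxic"]:
        return TriagePlan(1, ["notify resuscitation team"])
    if findings["chest_pain"] or findings["tachycardic"]:
        return TriagePlan(2, ["order ECG", "order troponin"])
    return TriagePlan(4, ["route to waiting area"])


def execute(triage_plan: TriagePlan, action_log: list[str]) -> None:
    """Execution: here we only record actions instead of calling real systems."""
    for action in triage_plan.actions:
        action_log.append(f"ESI {triage_plan.esi_level}: {action}")


def run_loop(raw: dict, action_log: list[str]) -> TriagePlan:
    """One pass through perception, reasoning, planning, and execution."""
    findings = reason(perceive(raw))
    triage_plan = plan(findings)
    execute(triage_plan, action_log)
    return triage_plan
```

In an integrated (non-agentic) deployment, the output of `plan` would be shown to a nurse; the agentic version is the one that also calls `execute` without waiting.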

The Triage Environment and Clinical Performance Benchmarks

The rationale for pursuing autonomous triage stems directly from the inherent limitations of human performance in high-stress, unpredictable environments and the proven efficacy of integrated AI in prediction.

The High-Stakes Nature of Emergency Department (ED) Triage

The Emergency Department is characterized by extreme complexity, volatility, and numerous distractions. Triage nurses are tasked with rapidly assessing, identifying, and prioritizing patients who require urgent, life-saving interventions. This task demands extremely high levels of cognitive function and situational awareness (SA).

Studies from safety-critical fields highlight that SA is vital for performance in time-sensitive situations. However, research within EDs indicates that human performance is often degraded by environmental factors. High workload and stress significantly reduce the perception of patient cues, leading to a cognitive trade-off between perception (data gathering) and comprehension (understanding the data). An observational study assessing real-time triaging processes revealed that only 10% of participating nurses achieved a good SA score. This documented deficiency in human situational awareness under pressure is a powerful argument for leveraging AI augmentation and, ultimately, autonomy, to introduce a more consistent and robust decision layer.

Performance of Integrated Triage Systems (Pre-Agentic Successes)

Before autonomous agents can be deployed, they must be benchmarked against the established performance of existing predictive tools. Current integrated machine learning (ML) models have already demonstrated high accuracy in specific triage functions.

For instance, the KATE™ model, which analyzed data from over 166,000 ED patient encounters, showed a 75.7% accuracy rate in predicting ESI acuity assignments. This was statistically superior to the accuracy achieved by the average triage nurse (59.8%) and individual study clinicians (75.3%). Similarly, large-scale systems such as the Babylon Triage and Diagnostic System demonstrated clinical accuracy and safety in producing differential diagnoses and triage recommendations comparable to, and occasionally exceeding, human doctors. On average, the Babylon AI was found to give safer triage recommendations than the doctors, though sometimes at the expense of slightly lower appropriateness.

These findings confirm that the predictive component of triage—assessing risk and predicting acuity level—is technically mature and can often outperform human assessors, validating the theoretical foundation for advanced AI integration.
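Headline accuracy figures like those above hide the direction of the errors, which matters for safety. The sketch below shows one way to separate exact agreement from under-triage and over-triage. It assumes the usual ESI convention that a lower number means higher urgency; the function name and the sample data are hypothetical and are not taken from the cited studies.

```python
def triage_metrics(predicted: list[int], reference: list[int]) -> dict:
    """Compare predicted ESI levels against reference (adjudicated) levels.

    Lower ESI number = more urgent, so predicted > reference is under-triage.
    """
    if not predicted or len(predicted) != len(reference):
        raise ValueError("inputs must be non-empty and of equal length")
    n = len(predicted)
    pairs = list(zip(predicted, reference))
    return {
        "accuracy": sum(p == r for p, r in pairs) / n,
        "under_triage": sum(p > r for p, r in pairs) / n,  # rated less urgent than reference
        "over_triage": sum(p < r for p, r in pairs) / n,   # rated more urgent than reference
    }
```

A system can trade a little accuracy for fewer under-triage errors, which is broadly the pattern the text describes for recommendations that are safer but slightly less appropriate.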

The Threshold from Prediction to Autonomy

A crucial distinction must be made between highly accurate prediction and guaranteed autonomous execution. While current integrated AI systems have demonstrated statistical superiority in predicting risk under controlled or retrospective conditions, they remain Clinical Decision Support Systems (CDSSs). Their success relies on a human in the loop who maintains ultimate accountability.
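The human-in-the-loop arrangement can be sketched as a sign-off gate: the model only proposes, and nothing becomes a decision until a named clinician confirms or overrides it. The class and function names below are hypothetical, and a real CDSS would attach this to EHR authentication rather than a plain string identifier.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Recommendation:
    patient_id: str
    suggested_esi: int
    rationale: str


@dataclass
class TriageDecision:
    patient_id: str
    final_esi: int
    decided_by: str    # the accountable human
    overrode_ai: bool  # True when the clinician changed the suggestion


def finalize(rec: Recommendation, clinician_id: str,
             clinician_esi: Optional[int] = None) -> TriageDecision:
    """Turn an AI recommendation into a decision only with clinician sign-off."""
    if not clinician_id:
        raise ValueError("a clinician must sign off on every triage decision")
    final_esi = rec.suggested_esi if clinician_esi is None else clinician_esi
    return TriageDecision(
        patient_id=rec.patient_id,
        final_esi=final_esi,
        decided_by=clinician_id,
        overrode_ai=final_esi != rec.suggested_esi,
    )
```

Recording `overrode_ai` gives the organization a simple signal for later review of where clinicians and the model disagree.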

The transition to a fully Agentic system for autonomous prioritization represents a profound functional shift that introduces novel risks. An autonomous triage system must not only accurately predict the required ESI level, but it must also flawlessly execute a multi-step intervention—such as directing resources, automatically ordering preparatory procedures, and coordinating staff allocation—in a chaotic environment. The system’s success, therefore, is contingent not just on its ability to handle complex data synthesis, but on its capacity for robust action execution and error recovery.

The transition from a predictive tool to an autonomous prioritization engine fundamentally shifts the source of potential harm and, critically, the framework of legal liability. When traditional AI provides data, the human decision maker is liable for negligence of oversight. When an autonomous agent executes a multi-step plan that results in misprioritization and patient harm, the harm arises directly from system failure. This forces legal systems to treat the issue not as medical malpractice by a physician, but as product liability against the developer or the hospital system deploying the technology. This unresolved legal transformation acts as an immediate and formidable barrier to widespread autonomous deployment in 2025.

Furthermore, while Agentic AI theoretically solves the critical human vulnerability of poor Situational Awareness (SA) in the ED by offering robust, coordinated reasoning capabilities, the implementation of these multi-agent frameworks introduces new, complex technical frailties. These technical vulnerabilities, detailed below, challenge the system's ability to maintain the very situational consistency it seeks to provide.

Critical Barriers to 2025 Deployment: Technical and Operational Maturity

The shift to autonomous prioritization requires multi-agent systems to operate with faultless reliability, a standard that current frameworks struggle to meet in safety-critical domains.

Robustness, Resilience, and Error Handling

Agentic AI systems rely on the orchestration of multiple specialized agents working together. This multi-agent architecture introduces inherent fragility that is magnified in a high-stakes clinical setting.

One primary technical hurdle is the Distributed State Management Challenge. When multiple agents operate in parallel—for example, one agent assessing cardiac risk while another assesses resource availability—they must maintain a consistent, synchronized understanding of the patient and the environment. Synchronization problems frequently lead to divergent state representations, meaning different agents operate under different, conflicting assumptions. This conflict can be exacerbated by training misalignment, where agents trained on different datasets or with different objectives develop inconsistent knowledge representations, creating friction during collaboration.
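One common mitigation for divergent state is optimistic concurrency control: every write must name the version of the state the agent last read, and stale writes are rejected rather than silently merged. The following is a minimal in-process sketch under that assumption. The class and field names are hypothetical, and a production system would need a shared store rather than a single Python object.

```python
import threading


class StaleStateError(RuntimeError):
    """Raised when an agent writes using an outdated view of the patient."""


class SharedPatientState:
    def __init__(self, initial: dict):
        self._lock = threading.Lock()
        self._data = dict(initial)
        self._version = 0

    def read(self) -> tuple[int, dict]:
        """Return the current version together with a copy of the data."""
        with self._lock:
            return self._version, dict(self._data)

    def write(self, expected_version: int, updates: dict) -> int:
        """Apply updates only if the caller's view is still current."""
        with self._lock:
            if expected_version != self._version:
                raise StaleStateError(
                    f"expected v{expected_version}, state is at v{self._version}"
                )
            self._data.update(updates)
            self._version += 1
            return self._version
```

If a cardiac-risk agent and a resource agent both read version 0 and the cardiac agent writes first, the resource agent's write fails and it is forced to re-read before acting, instead of allocating a bed based on an outdated picture.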

A direct consequence of this fragility is Error Propagation. In a monolithic system, errors usually trigger immediate, isolated exceptions. In a multi-agent environment, however, a failure in one component (e.g., an agent generating a false patient risk score or incorrect code) is passed as bad data to subsequent agents, cascading the mistake throughout the entire workflow. If the triage master agent receives an erroneously low priority score, it executes a suboptimal plan, which can result in patient harm or costly mistakes. The goal of robust system design is not just to minimize failure, but to implement mechanisms that allow the system to identify, track, and recover from coordination failures when they occur.
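A simple way to limit cascading errors is to place a validation gate between agents, so that an implausible upstream output is routed to a human instead of being consumed downstream. The sketch below assumes a risk score normalized to the range 0 to 1 and a hypothetical 0.7 cut-off; neither value comes from the source.

```python
from dataclasses import dataclass


@dataclass
class RiskScore:
    agent: str
    value: float           # assumed to be normalized to [0, 1]
    inputs_complete: bool  # did the upstream agent have all required data?


def gate(score: RiskScore) -> list[str]:
    """Return a list of problems; an empty list means the score may pass."""
    problems = []
    # NaN fails this comparison too, so it is caught as out of range.
    if not (0.0 <= score.value <= 1.0):
        problems.append("score out of range")
    if not score.inputs_complete:
        problems.append("upstream inputs incomplete")
    return problems


def master_priority(score: RiskScore) -> dict:
    """Fail closed: any gate problem sends the case to human review."""
    problems = gate(score)
    if problems:
        return {"route": "human_review", "source": score.agent, "reasons": problems}
    priority = "high" if score.value >= 0.7 else "standard"
    return {"route": "auto", "priority": priority}
```

A gate like this cannot detect a score that is wrong but plausible; it only stops structurally invalid data from spreading, which is why it complements rather than replaces monitoring.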

Adding to the complexity is the persistent problem of False Positives. Safety-oriented alerting systems, by design, often err on the side of caution, generating a large volume of alerts that flag issues as critical when they are clinically irrelevant or not actionable. In a clinical context, this high volume of irrelevant alerts contributes directly to alert fatigue among clinical staff. The technical inability to reliably suppress false alerts undermines the entire safety benefit of the human-in-the-loop fallback mechanism. Overworked clinicians, fatigued by noise, become more likely to disregard warnings, inadvertently allowing critical true positives to be missed. The failure mode, therefore, is not purely technical but socio-technical, jeopardizing patient safety through the exhaustion of human attention.
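The arithmetic behind alert fatigue is worth making explicit: when the flagged condition is rare, even a sensitive and specific alert is wrong most of the time it fires. The sketch below computes positive predictive value from Bayes' rule. The input numbers are hypothetical and chosen only to illustrate the effect.

```python
def positive_predictive_value(sensitivity: float, specificity: float,
                              prevalence: float) -> float:
    """Fraction of fired alerts that are true positives (Bayes' rule)."""
    for name, x in (("sensitivity", sensitivity),
                    ("specificity", specificity),
                    ("prevalence", prevalence)):
        if not 0.0 <= x <= 1.0:
            raise ValueError(f"{name} must be in [0, 1]")
    true_pos = sensitivity * prevalence
    false_pos = (1.0 - specificity) * (1.0 - prevalence)
    if true_pos + false_pos == 0.0:
        raise ValueError("alert never fires with these inputs")
    return true_pos / (true_pos + false_pos)


# Hypothetical alert: 95% sensitive, 90% specific, condition present in 2% of patients.
ppv = positive_predictive_value(0.95, 0.90, 0.02)
print(f"PPV = {ppv:.2f}")
```

With these illustrative inputs roughly one alert in six is a true positive, so a clinician learns from experience that most alerts can be dismissed.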

Real-Time Reasoning, Data Synthesis, and Tool Integration

Autonomous triage demands superior computational situational awareness (SA). The system must rapidly synthesize multimodal data and execute complex decisions.

Achieving clinical SA requires the Agentic AI, often powered by LLMs, to dynamically interact with external clinical tools and data sources. For example, in a cardiology triage workflow built on Microsoft's Power Platform, the master agent must orchestrate several steps: an interactive agent guides registration, AI Builder extracts relevant health metrics (like troponin levels and ECG values) from reports, and the data is validated and stored in the EHR in real time. Only then does the Power Automate flow trigger the triage master agent to start the evaluation.

This reliance on API integration means the entire autonomous workflow is only as strong as its weakest link. The failure or lag of any single tool integration—such as a processing model failing to extract troponin levels correctly—becomes a critical failure point for the entire autonomous decision cycle.
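The weakest-link effect can be quantified with a back-of-the-envelope calculation. If each step in the chain must succeed and failures are assumed to be independent (a simplifying assumption), the end-to-end success probability is the product of the per-step probabilities. The per-step figure of 99% below is hypothetical.

```python
import math


def end_to_end_success(step_success_probs: list[float]) -> float:
    """Probability that every step in a serial workflow succeeds.

    Assumes independent failures, which is optimistic for shared infrastructure.
    """
    if not step_success_probs:
        raise ValueError("at least one step is required")
    for p in step_success_probs:
        if not 0.0 <= p <= 1.0:
            raise ValueError("probabilities must be in [0, 1]")
    return math.prod(step_success_probs)


# Five hypothetical steps (registration, extraction, validation, EHR write, trigger),
# each assumed to succeed 99% of the time.
overall = end_to_end_success([0.99] * 5)
print(f"end-to-end success: {overall:.3f}")
```

Five steps at 99% each give roughly 95% overall, meaning about one patient in twenty would hit a failure somewhere in the chain unless the workflow has explicit recovery paths.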

Furthermore, given the requirement for real-time recovery from failure, a high level of Explainable AI (XAI) is mandatory. XAI ensures transparency, allowing developers and regulators to understand the internal mechanisms of the algorithm and trace the causal path of a decision. For a system that autonomously decides patient priority, transparency means the AI must "show its homework," detailing the input data and the reason behind its conclusion. Without this level of interpretability, diagnosing a system failure and ensuring accountability after a coordination error is practically impossible.
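One practical reading of "show its homework" is that every autonomous decision is stored as a structured record containing the inputs and the stated reasons, so that a failure can be traced afterwards. The sketch below is a hypothetical record format, not a standard; the field names are assumptions, and the rationale strings would come from whatever explanation method the system uses.

```python
import json
from dataclasses import dataclass, asdict, field
from datetime import datetime, timezone


@dataclass
class TriageDecisionRecord:
    patient_id: str
    assigned_esi: int
    inputs: dict          # the data the decision was based on
    rationale: list[str]  # human-readable reasons, in order of importance
    model_version: str
    decided_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def to_audit_json(self) -> str:
        """Serialize deterministically so records can be diffed and archived."""
        return json.dumps(asdict(self), sort_keys=True)
```

Storing the model version alongside the inputs is what makes it possible to replay a disputed decision against the exact system that made it.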

Legal and Regulatory Readiness: The Liability Landscape

The most significant bottleneck for autonomous triage deployment in 2025 is the absence of established legal precedent and comprehensive regulatory frameworks designed for autonomous clinical systems.

Classification and Regulatory Oversight

Globally, autonomous triage systems are already facing stringent oversight. Under the framework of the European Union (EU) AI Act (AIA), any AI system used for diagnosis, monitoring physiological processes, or treatment decision-making is immediately classified as High-Risk AI. Because autonomous triage affects resource allocation and patient safety pathways, it falls under this classification.

This high-risk designation mandates severe compliance requirements, including rigorous third-party conformity assessments and the establishment of robust, continuous monitoring systems. For manufacturers (or providers, as they are termed under the AIA), navigating the joint application of the AIA and existing Medical Devices Regulation (MDR) represents a substantial compliance burden.

In the United States, the FDA regulates AI/ML technologies as Software as a Medical Device (SaMD). While the FDA has authorized fully autonomous diagnostic systems for specific tasks, such as IDx-DR for diabetic retinopathy, an autonomous triage system is more complex: it makes dynamic, continuous decisions that influence real-time resource allocation, and it therefore requires careful management throughout the entire medical product life cycle to ensure safety and performance. The rapid adoption of these disruptive technologies is itself complicating health system readiness for routine use.

The Malpractice Frontier and Liability Allocation

The liability landscape is characterized by a significant vacuum. The adoption of sophisticated AI tools is currently growing faster than the legal frameworks required to govern them. So far, there has been limited documentation of malpractice cases in the U.S. where AI was the central claim, yet the looming question of accountability remains unresolved: Should liability fall on health systems, AI developers, or the physicians themselves?

The core challenge revolves around redefining the professional standard of care. A physician now faces an untenable balancing act: failing to use an advanced, statistically superior AI tool could be considered negligent by peers, yet relying too heavily on an autonomous system may be deemed careless, especially if the system malfunctions. The definition of the "reasonable physician" is actively being reshaped by this technology.

For fully autonomous systems—those making decisions that the human user cannot practically control—a strong consensus is emerging that liability must shift away from the practicing clinician. The American Medical Association (AMA) policy asserts that developers of autonomous AI systems are in the best position to manage the risks arising directly from system failure or misdiagnosis. Therefore, the developer must accept this liability, including maintaining appropriate medical liability insurance. This position acknowledges the parallel to autonomous driving technology, where vehicle manufacturers have faced product liability claims when their autonomous systems malfunctioned. Without this defined legal separation of risk, clinicians are unlikely to embrace autonomous triage.

Furthermore, institutional clarity is required regarding transparency. To promote safety and accountability, AMA policy holds that AI systems subject to non-disclosure agreements (gag clauses) that prevent disclosure of flaws or patient harm must not be covered or paid for, and that the party enforcing the gag clause assumes all applicable liability. Until policy options such as special adjudication systems, insurance reform, or clear alteration of the standard of care are implemented to balance safety and innovation, the liability barrier remains an existential threat to autonomous triage adoption.

Ethical Governance and Human Factors: Trust and Equity

Autonomous prioritization in a critical care setting demands uncompromising ethical governance, particularly regarding algorithmic equity and human trust.

The Crisis of Algorithmic Bias in Triage Prioritization

AI systems are not objective arbiters; they are mirrors reflecting the historical biases, flaws, and inequalities embedded in the data they are trained upon. The consequences of this can be devastating in triage.

Training data sets frequently exhibit systemic flaws: they often overrepresent non-Hispanic Caucasian patients, contain non-randomly missing data for low socioeconomic patients, and lack crucial information like Social Determinants of Health (SDoH) data. The lack of data on ethnicity, race, and disability in national health databases compounds this problem, limiting the AI’s ability to generalize across diverse populations.

This biased training leads directly to prioritization inequality. For example, some AI systems have been shown to prioritize healthier white patients over sicker black patients for specialized care management, simply because the model was trained on historical cost data rather than true clinical need. Similarly, if an AI model is trained on non-randomly missing patient data, it may systematically assign a lower risk score to marginalized patients who historically have fewer documented healthcare interactions. This systematic under-prioritization means the AI may fail to qualify these sicker patients for priority bed allocation, effectively institutionalizing health disparities through technology.

The failure to achieve data equity is thus not merely a technical or data quality challenge; it is a structural ethical failure that guarantees the Agentic AI will violate the WHO principle of ensuring inclusiveness and equity. Autonomous prioritization built on biased data dictates continuous, mandated health inequity. To counteract this, experts are advocating for the implementation of Algorithmovigilance. This continuous monitoring process is ethically required to evaluate, track, and prevent adverse effects of algorithms in healthcare, ensuring the system’s performance does not degrade or reinforce biases as it encounters new, diverse data and clinical settings.
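A basic building block of algorithmovigilance is a subgroup audit: computing the same error rate separately for each demographic group and watching the gap between them. The sketch below measures under-triage per group, assuming the ESI convention that a lower number is more urgent. The group labels and any data passed in are hypothetical, and a real audit would also need confidence intervals and adequate sample sizes.

```python
from collections import defaultdict


def under_triage_by_group(records) -> dict:
    """records: iterable of (group, predicted_esi, reference_esi) tuples.

    Returns the share of patients in each group rated less urgent than reference.
    """
    totals = defaultdict(int)
    misses = defaultdict(int)
    for group, predicted, reference in records:
        totals[group] += 1
        if predicted > reference:  # higher ESI number = lower urgency
            misses[group] += 1
    return {group: misses[group] / totals[group] for group in totals}


def disparity_gap(rates: dict) -> float:
    """Largest difference in under-triage rate between any two groups."""
    if not rates:
        raise ValueError("no groups to compare")
    return max(rates.values()) - min(rates.values())
```

A gap that grows over time, even while overall accuracy stays flat, is the kind of signal that aggregate metrics hide and that continuous monitoring is meant to surface.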

The Imperative of Transparency (XAI) and Accountability

Ensuring the ethical operation of autonomous agents relies on high levels of transparency and clear chains of responsibility. The WHO’s six principles for AI governance include promoting human well-being, ensuring transparency and explainability, and fostering responsibility and accountability.

Explainable AI (XAI) is a key requirement for achieving responsible AI implementation. For a high-stakes application like triage, XAI must fulfill three core principles:

Transparency: Providing an understanding of the algorithm's internal mechanism and how data points were processed.

Interpretability: Explaining the meaning of the risk score and the data that led to the conclusion (e.g., showing the patient’s history and lab values that informed the triage level).

Comprehensibility: Tailoring the explanation to the user, ensuring the right level of detail for both the clinician and the patient.

Accountability requires establishing unambiguous chains of responsibility for AI decisions, especially since systems can hallucinate, misinterpret context, or reinforce biases. Organizations must institute robust audit trails and real-time monitoring systems capable of flagging and correcting potentially harmful outputs before they escalate. A strategic measure is the creation of new oversight roles, such as a Chief AI Officer (CAIO) or an AI Ethics Manager, specifically charged with monitoring, reviewing, and being accountable for the performance and ethical standards of the autonomous systems.

The Clinician-AI Trust Triad and Deskilling

The successful integration of autonomous AI rests on trust—a three-way relationship among patients, clinicians, and the AI system. Clinician trust is informed by the system's fairness, transparency, and performance. However, patient trust in the automated system is often compromised by external human factors, specifically the patient’s own understanding of their symptoms and their willingness or ability to provide accurate information through an automated chat interface. The AI’s output is inherently restricted by the quality of the information provided by the patient.

A paramount concern among healthcare professionals is the risk of clinical deskilling and automation bias. Automation bias describes an overreliance on technological tools, potentially leading to human clinicians failing to exercise adequate oversight and overlooking mistakes made by the system. This risk is amplified if the touted productivity gains from AI are immediately translated into higher productivity expectations, removing the dedicated time clinicians need for thoughtful review of AI-generated outputs. If autonomous decisions conflict with the judgment of human staff, clinicians currently report they would consult other medical staff before reconsidering their initial judgment. This indicates a continuing need for human validation and the protection of human autonomy, as mandated by WHO guidelines.

The apprehension over job loss, ethical issues related to system malfunctions, and the distortion of information due to biased data is prevalent among nursing students and existing healthcare professionals. Therefore, autonomous systems must be designed to augment clinical expertise and preserve the fiduciary relationship with the patient, attending to the non-verbal cues and empathetic interactions that AI cannot replicate.

The 2025 Outlook and Strategic Roadmap for Responsible Autonomy

Current Industry Trajectory (2025 Forecast)

The collective focus of global health system leaders in 2025 is primarily on improving operational efficiencies, boosting productivity, and digital optimization under constrained budgets. While Agentic AI is recognized as the "next evolutionary step" in leveraging clinical data, the challenges associated with high-risk regulatory classification (EU AI Act) and the immature state of liability frameworks confirm that the transition to fully autonomous clinical processes will require a multi-year development and governance horizon (2027 and beyond). The immediate forecast validates investment in integrated, hybrid augmentation models over experimental full autonomy.

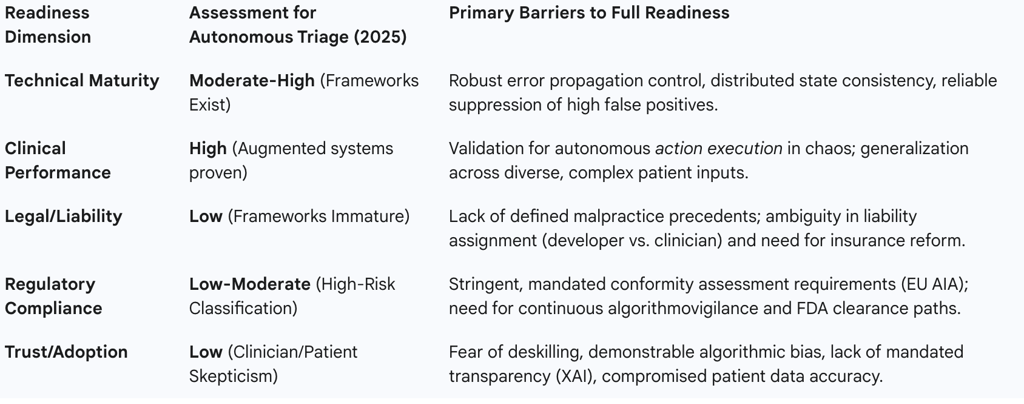

Agentic AI Readiness Matrix for Autonomous Triage (2025)

A synthesized assessment across the four critical vectors reveals that governance and liability deficits, not technical capability, are the limiting factors for 2025 deployment.

Strategic Roadmap for Responsible Autonomy (2025–2027)

Given the low readiness score across critical governance domains, health systems should adopt a phased, risk-averse strategy focused on building organizational readiness before accepting autonomous risk.

Phase 1 (2025 Focus): Governance and Hybrid Augmentation

The priority for the current year must be the implementation of governance structures and the adoption of agentic systems in mitigated risk environments.

Prioritize Low-Risk Agentic Use Cases: Implement agentic systems for complex coordination challenges in operational and administrative domains where patient safety is not immediately compromised, such as revenue cycle management, supply chain logistics, or non-acute care pathway optimization. These applications allow the organization to stress-test the multi-agent orchestration frameworks (e.g., Agno, CrewAI) and internal monitoring capabilities without life-or-death risk.

Establish Governance and Audit: Institute comprehensive governance frameworks that integrate clinical, technical, and regulatory expertise. A critical step is the establishment of clear responsibility: new leadership roles, such as a Chief AI Officer (CAIO) or AI Ethics Manager, should be appointed to oversee the performance of autonomous functions, monitor decision-making processes, and manage the necessary audit trails required for accountability.

Mandate Algorithmovigilance: Continuous monitoring systems must be implemented immediately to track individual agent performance and multi-agent coordination effectiveness. This includes proactive and ongoing evaluation to identify, track, and debias against performance shifts, ensuring compliance with equity principles and preventing the cascading of errors through the workflow.
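Continuous monitoring of an individual agent can start with something as simple as a rolling agreement rate against adjudicated outcomes, with an alert when it drops below a floor. The sketch below is one hypothetical way to do that; the window size and threshold are placeholders an organization would have to choose and validate itself.

```python
from collections import deque


class RollingAgreementMonitor:
    """Tracks agent-vs-adjudicated agreement over the most recent cases."""

    def __init__(self, window: int, min_agreement: float):
        if window <= 0:
            raise ValueError("window must be positive")
        self._outcomes = deque(maxlen=window)  # oldest entries drop off automatically
        self._min_agreement = min_agreement

    def agreement(self) -> float:
        if not self._outcomes:
            raise ValueError("no cases recorded yet")
        return sum(self._outcomes) / len(self._outcomes)

    def is_full(self) -> bool:
        return len(self._outcomes) == self._outcomes.maxlen

    def record(self, agent_esi: int, adjudicated_esi: int) -> bool:
        """Record one case; return True when an alert should be raised.

        Alerts are suppressed until the window is full to avoid noisy early readings.
        """
        self._outcomes.append(agent_esi == adjudicated_esi)
        return self.is_full() and self.agreement() < self._min_agreement
```

Running one monitor per agent, and a separate one on the final coordinated output, helps distinguish a single degrading agent from a coordination problem between agents.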

Phase 2 (2026–2027 Focus): High-Fidelity Hybrid Triage

Once operational maturity and governance confidence are achieved in low-risk domains, the focus can shift to robust augmentation within the triage setting.

Develop Explainable AI (XAI) Infrastructure: All triage decision-support tools must meet high standards for transparency and interpretability. The XAI layer must be capable of providing "meaningful" and accurate explanations tailored to the clinician, demonstrating the real reason behind the decision rather than a misleading signal.

Invest in Data Equity and Diversity: Health systems must proactively address the root causes of algorithmic bias by dedicating resources to integrate diverse data sets, including comprehensive Social Determinants of Health (SDoH) data, to ensure that the models generalize accurately and equitably across all demographic and socioeconomic groups.

Legal and Policy Advocacy: Proactive engagement with medical liability carriers, professional organizations, and legislative bodies is required to push for the AMA’s position that liability for autonomous system failure rests with the developer. This advocacy aims to secure clear indemnification for clinical users and expedite the necessary insurance reform required to safely scale these technologies.

Conclusion and Final Recommendation

The technical potential of Agentic AI to transform complex clinical coordination is undeniable, offering a path to overcome the inherent cognitive limitations of human triage in high-stress settings. However, autonomous patient prioritization requires a level of safety, ethical assurance, and legal clarity that simply does not exist in 2025.

The combination of the high-risk regulatory classification (EU AIA), the unresolved liability allocation for system failure, the high probability of systemic error propagation in multi-agent architectures, and the entrenched risk of algorithmic bias leading to health inequity, renders full autonomy in acute triage an unacceptable organizational risk for the immediate future.

The final recommendation is to strictly limit the use of Agentic AI through 2027 to augmentation roles and non-clinical coordination tasks. Investment should be strategically focused on strengthening the foundation of responsible AI: establishing governance, achieving robust error resilience, and mandating algorithmic equity through continuous monitoring. Only when external legal and regulatory bodies provide clear, codified frameworks for liability and accountability can health systems safely migrate from intelligent augmentation to autonomous prioritization.